First I will describe the problem and then briefly discuss SCSI reservations. Some of the content comes from support personnel. This is just to spread knowledge, not to take credit.

Problem: We had a 5-host ESX cluster running ESX 3.5 U4 on DL380 G5 servers, each with 32 GB of memory and 8 logical CPUs.

Suddenly we realized that 3 of the ESX hosts were disconnected from VirtualCenter. I restarted the “mgmt-vmware” service, but no luck. I then disconnected the host from VC and removed it, but we still could not add it back. Finally I tried to log in to the ESX host directly with the VC client, and that failed too. That is when we realized there was a serious issue.

I then tried to ‘cd’ into /vmfs/volumes and got the following message:

I checked all the ESX hosts and each one had the same issue: I could ‘cd’ into any directory except /vmfs. This confirmed that something had happened to the VMFS volumes.

I ran the QLogic utility “iscli” to perform HBA-level troubleshooting. The HBA did report an issue:

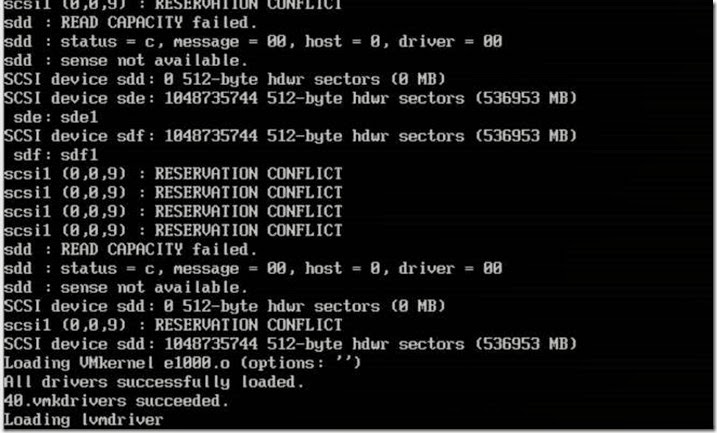

I checked the vmkernel log, which made me very suspicious: it had lots of SCSI reservation errors. All 3 ESX hosts showed similar errors.

Since this was a production environment, I decided not to waste time and contacted VMware for a solution. After a WebEx session, VMware gave the following recommendations to fix the issue:

- put each ESX host into maintenance mode (only 1 at a time)

- allow the VMs to be migrated off

- reboot the ESX host

- repeat the procedure for each ESX host.

OR

- fail over the active storage processor (contact your SAN vendor for assistance with this)

Well, I could not put the ESX hosts into maintenance mode (MM), since all of them share the LUN. Although only 3 hosts had reported the problem, the other two could not see the LUN either. I asked the storage admin about a possible failover and he declined, since the filer is also used by other applications. Finally I decided simply to reboot the ESX hosts: a shortcut to fixing the issue, but one with maximum outage. After rebooting an ESX host I saw the following messages on the console:

After rebooting, the ESX host was not able to come back online. One ESX host came online after about 1 hour. Once it appeared in VC, I found that none of the LUNs were mounted. I ran a rescan, without any success. I then did HBA-level troubleshooting and found that I could reach the filer IP from the HBA BIOS. I then found that the target IPs were those of the vswif (the virtual interface on the NetApp filer to which all physical NICs are tied for fault tolerance and redundancy). Just for testing, I added the filer's physical IP address as the HBA target and ran a rescan. I was able to see all the VMs.

I checked with the network team and they confirmed that there was a routing issue between the vswif network and the iSCSI network, which are on two different VLANs (I am not sure why).

I then put the rest of the hosts into recovery mode and disabled the HBA. After that they came online quickly, and I added the correct target IP.

That is how we fixed the issue.

So now the question: why did it happen?

SCSI reservations are used during the snapshot process. It seems there was a stale SCSI reservation on the storage/HBA, which can cause snapshots to fail as well as performance problems.

So what is a SCSI reservation?

ESX uses a "locking" mechanism called "SCSI reservation" to share LUNs between ESX hosts. These "reservations" are non-persistent and are released when the activity that required them is completed. The Service Console regularly monitors the LUNs and checks for any "reservations" that have aged too long. The ESX host will then try to release the lock. If, however, another application running from the Service Console is using the LUN, it can immediately reclaim the LUN or place another "reservation". Thus, if 3rd party applications are not designed to release their locks, we see a continuous flood of heartbeat reclaiming events in the logs.
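To make the locking behaviour concrete, here is a minimal Python sketch of a non-persistent, exclusive reservation plus a periodic monitor that clears reservations older than a threshold. Everything here is hypothetical: `Lun`, `reclaim_stale`, `simulate`, the 10-second check interval and the 5-second age limit are illustrative assumptions, not VMware APIs or defaults. It shows why an agent that never releases its lock produces a reclaim event on every check instead of just one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lun:
    """Hypothetical model of one shared LUN's reservation state (not a VMware API)."""
    name: str
    holder: Optional[str] = None   # host/app currently holding the reservation
    reserved_at: float = 0.0       # time (seconds) the reservation was placed

    def reserve(self, owner: str, now: float) -> bool:
        """Exclusive reservation: succeeds only if the LUN is free or already ours."""
        if self.holder in (None, owner):
            self.holder, self.reserved_at = owner, now
            return True
        return False

    def release(self, owner: str) -> None:
        """Non-persistent: the holder releases once its activity is done."""
        if self.holder == owner:
            self.holder = None


def reclaim_stale(lun: Lun, now: float, max_age: float) -> bool:
    """Hypothetical monitor: clear a reservation older than max_age seconds."""
    if lun.holder is not None and now - lun.reserved_at > max_age:
        lun.holder = None
        return True
    return False


def simulate(agent_rereserves: bool, checks: int, max_age: float = 5.0) -> int:
    """Count reclaim events when an agent holds the LUN and never releases it."""
    lun = Lun("lun0")
    lun.reserve("agent", now=0.0)
    events = 0
    for i in range(1, checks + 1):
        now = i * 10.0  # assumed: one monitor check every 10 s
        if reclaim_stale(lun, now, max_age):
            events += 1
            if agent_rereserves:
                lun.reserve("agent", now)  # badly behaved agent grabs it again
    return events


print(simulate(True, 6), simulate(False, 6))
```

In this toy model `simulate(True, 6)` gives 6 reclaim events (one per check), while `simulate(False, 6)` gives 1, because nobody takes the LUN again after the first clear.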

SCSI reservations are needed to prevent data corruption in environments where LUNs are shared between many hosts.

Every time a host updates the VMFS metadata, it needs to place a SCSI reservation on the LUN.

When 2 hosts try to reserve the same LUN at the same time, a SCSI reservation conflict occurs; if the number of reservation conflicts is too large, ESX will fail the I/O.

SCSI reservation conflicts, "status busy" messages and Windows symmpi errors can be a sign of SAN latency or of a failure to release SCSI file locks when requested. The SAN storage processors can hold the reservation, which then requires a LUN reset or an SP reboot.

VMware ESX 3.0.x and 3.5 use "Exclusive Logical Unit Reservations" (SCSI-2 style reservations). These reservations are used whenever the VMkernel applies changes to the VMFS metadata. This prevents multiple hosts from writing to the metadata concurrently and is how VMFS corruption is prevented. After the metadata update is done, the reservation is released by the VMkernel.
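The reserve, update, release pattern can be sketched in Python as below. This is a simplified model under stated assumptions: `SharedLun` and `create_files` are hypothetical, a `threading.Lock` stands in for the array-side exclusive reservation, and each thread plays the role of an ESX host. Unlike a real host, which gets a reservation conflict and retries, a thread here simply blocks until the lock is free.

```python
import threading
from contextlib import contextmanager


class SharedLun:
    """Hypothetical stand-in for a shared LUN. The Lock plays the role of the
    array-side SCSI-2 exclusive reservation: only one holder at a time."""

    def __init__(self) -> None:
        self._reservation = threading.Lock()
        self.metadata = {"files": 0}  # toy VMFS metadata

    @contextmanager
    def reserved(self):
        self._reservation.acquire()      # RESERVE the whole LUN
        try:
            yield self.metadata
        finally:
            self._reservation.release()  # RELEASE once the update is done


def create_files(lun: SharedLun, count: int) -> None:
    """One 'host' creating files; each creation is a metadata update under a reservation."""
    for _ in range(count):
        with lun.reserved() as md:
            md["files"] += 1  # read-modify-write is safe while reserved


lun = SharedLun()
hosts = [threading.Thread(target=create_files, args=(lun, 1000)) for _ in range(5)]
for h in hosts:
    h.start()
for h in hosts:
    h.join()
print(lun.metadata["files"])  # 5000
```

Without the reservation around the read-modify-write, concurrent updates could lose increments, which is the toy equivalent of metadata corruption.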

Examples of VMFS operations that require metadata updates are:

* Creating or deleting a VMFS data store

* Expanding a VMFS data store onto additional extents

* Powering on or off a VM

* Acquiring or releasing a lock on a file

* Creating or deleting a file

* Creating a template

* Deploying a VM from a template

* Creating a new VM

* Migrating a VM with VMotion

* Growing a file (e.g. a Snapshot file or a thin provisioned Virtual Disk)

When the VMkernel requests a SCSI reservation of a LUN from the storage array, it attempts the metadata updates of the VMFS volume on that LUN. If the LUN is still reserved by another host and the reservation has not yet been granted to this host, the I/O fails due to a reservation conflict and the VMkernel retries the command up to 80 times. The VMkernel log shows the remaining “Conflict Retries” (CR) count, which counts down to zero. The retry interval is randomized between hosts to avoid concurrent retries on the same LUN from different hosts, which improves the chances of acquiring a reservation.

If the retries have been exhausted, the I/O fails due to too many SCSI reservation conflicts.
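The retry behaviour described above can be sketched as follows. This is an illustrative model only: `reserve_with_retries`, `busy_for`, `TooManyConflicts` and the 0 to 20 ms retry interval are assumptions; only the limit of 80 retries, the CR countdown and the randomized interval come from the text. The function returns the remaining CR count on success and raises once the count has reached zero.

```python
import random
import time
from typing import Callable, Optional


class TooManyConflicts(Exception):
    """Hypothetical error: all conflict retries were used up, so the I/O fails."""


def reserve_with_retries(try_reserve: Callable[[], bool],
                         max_retries: int = 80,
                         rng: Optional[random.Random] = None,
                         sleep: Callable[[float], None] = time.sleep) -> int:
    """Try to reserve a LUN, retrying on conflict up to max_retries times.

    Returns the remaining "Conflict Retries" (CR) count when the reservation
    is granted; raises TooManyConflicts once CR has counted down to zero.
    """
    if rng is None:
        rng = random.Random()
    remaining = max_retries              # CR counter, counts down to zero
    while True:
        if try_reserve():
            return remaining             # reservation granted
        if remaining == 0:
            raise TooManyConflicts("too many SCSI reservation conflicts")
        remaining -= 1
        # Randomized interval so hosts do not retry in lockstep (range assumed)
        sleep(rng.uniform(0.0, 0.02))


def busy_for(n: int) -> Callable[[], bool]:
    """Hypothetical LUN that stays reserved by another host for n attempts."""
    attempts = 0

    def try_reserve() -> bool:
        nonlocal attempts
        attempts += 1
        return attempts > n

    return try_reserve


print(reserve_with_retries(busy_for(3), sleep=lambda s: None))  # 77
```

A LUN that stays busy for 80 attempts is still acquired with CR at 0; one that stays busy for 81 attempts makes the call raise, which corresponds to the failed I/O.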

Areas of SCSI reservation contention:

-- 3rd party software (agents)

-- firmware on SAN SP/HBA

-- pathing

-- Heavy I/O

Therefore, to mitigate the negative impact of SCSI reservations, try the following:

1. Serialize operations on shared LUNs: if possible, do not run operations that require SCSI reservations on different hosts at the same time.

2. Increase the number of LUNs and try to limit the number of ESX hosts accessing the same LUN.

3. Avoid using snapshots, as they cause a lot of SCSI reservations.

4. Do not schedule backups (VCB or console based) in parallel from the same LUN.

5. Try to limit the number of VMs per LUN, and check which targets are being used to access the LUNs.

6. Check that you have the latest HBA firmware on all ESX hosts.

7. Check that the ESX hosts are running the latest BIOS (to avoid conflicts with the HBA drivers).

8. Contact your SAN vendor for information on SP timeout values, performance settings and storage array firmware.

9. Turn off 3rd party agents (e.g. storage agents).

10. Watch out for MSCS RDMs (the active node holds a permanent reservation).

11. Ensure correct Host Mode setting on the SAN array

12. LUNs removed from the system without a rescan can appear as locked.
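To see why limiting the number of hosts per LUN (item 2) helps, consider a deliberately simplified model, which is my assumption and not something from the KB: in a given short window each of n hosts independently tries to reserve the LUN with probability p, and a conflict occurs whenever two or more try at once. Then P(conflict) = 1 - (1-p)^n - n·p·(1-p)^(n-1). The sketch below evaluates this; `p_conflict` is a hypothetical helper.

```python
def p_conflict(n: int, p: float) -> float:
    """Probability that 2 or more of n hosts try to reserve in the same window.

    Simplified model (assumption): each host tries independently with
    probability p; conflict = NOT (nobody tries OR exactly one host tries).
    """
    none_try = (1 - p) ** n
    exactly_one = n * p * (1 - p) ** (n - 1)
    return 1 - none_try - exactly_one


for hosts in (2, 5, 10):
    print(f"{hosts} hosts: {p_conflict(hosts, 0.1):.3f}")
# 2 hosts: 0.010
# 5 hosts: 0.081
# 10 hosts: 0.264
```

With p = 0.1, going from 2 to 10 hosts on the same LUN raises the chance of a conflict in any given window from about 1% to about 26% in this toy model.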

When the storage processor fails to release a reservation, either the release request did not get through (hardware, firmware or pathing problems), a 3rd party application running on the Service Console did not send the release, or busy VM operations are still holding the lock.

Some data stolen from: Troubleshooting SCSI Reservation failures on Virtual Infrastructure 3 and 3.5, http://kb.vmware.com/kb/1005009

Some unanswered questions:

This brings other questions to mind. Even if you have HA/DRS, you can still have an outage. Would Fault Tolerance help? How can it help if the routing is messed up, or if someone pulls the HBA cable itself? HA kicks in when there is a host failure or the Service Console goes down; if neither happens, no HA event occurs. So we need to plan for storage-level HA. Why doesn't VMware provide storage-level HA capabilities as well? Yes, I know this involves multiple vendors, but can't VMware work with them?

6 comments:

Good example of starting at the lowest levels of the stack and working your way up. When things look funny at one level and everything looks "right" look at the layer below. You might just find the issue.

Congrats on the fix.

Thanks Ian for your comment. It’s all stolen from you :)

Vikash/Ian - it's interesting that you were able to restore access to the LUNs by changing the IP address used by iSCSI. We have experienced the exact same issue twice now; however, we are using FC and NetApp.

5 ESX hosts,... The problem seems to originate from an issue with VCB and a backup snapshot failing.

The only way we were able to restore access to the LUNs, regardless of the number of times the ESX hosts were rebooted, was to reboot each of the NetApp filers.

I'll bookmark this blog and update if we find anything, as we have opened cases with both NetApp and VMware.

Very good post by the way.

This article is very informative and very well described.

Ya, I got what I was looking for... nice explanation.
