I was trying to mount an NFS datastore on vSphere 4.0 from my NetApp simulator (version 7.3), and I got the following error message from the VMkernel while mounting:

WARNING: NFS: 898: RPC error 13 (RPC was aborted due to timeout) trying to get port for Mount Program (100005) Version (3) Protocol (TCP) on Server (x.x.101.124)
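The warning says the host asked the filer's portmapper (TCP port 111) which port the MOUNT service (program 100005, version 3, over TCP) listens on, and the call timed out. The usual tool for checking this is `rpcinfo -p <filer-ip>`. The sketch below performs the same single portmapper GETPORT query by hand, so you can see exactly which step fails. It is a minimal illustration, assuming you run it from a Linux/Unix machine on the same network as the VMkernel port (it does not use the VMkernel's own network stack, so it only approximates what ESX does); the function names and the fixed transaction ID are hypothetical choices.

```python
import socket
import struct

PMAP_PROG, PMAP_VERS, PMAPPROC_GETPORT = 100000, 2, 3
MOUNT_PROG, MOUNT_VERS = 100005, 3   # values taken from the VMkernel warning
IPPROTO_TCP = 6


def build_getport_call(xid: int, prog: int = MOUNT_PROG,
                       vers: int = MOUNT_VERS, prot: int = IPPROTO_TCP) -> bytes:
    """Encode a portmapper GETPORT call as ONC RPC over TCP (RFC 5531 / RFC 1833)."""
    body = struct.pack(
        ">10I",
        xid, 0, 2,                               # xid, msg_type CALL, RPC version 2
        PMAP_PROG, PMAP_VERS, PMAPPROC_GETPORT,
        0, 0,                                    # credential: AUTH_NONE, zero length
        0, 0,                                    # verifier: AUTH_NONE, zero length
    ) + struct.pack(">4I", prog, vers, prot, 0)  # mapping; the port field is ignored
    # TCP record marking: high bit = last fragment, low 31 bits = fragment length.
    return struct.pack(">I", 0x80000000 | len(body)) + body


def parse_getport_reply(data: bytes, xid: int) -> int:
    """Return the port from an accepted GETPORT reply (0 means not registered)."""
    _mark, rxid, mtype, reply_stat, _vflavor, vlen = struct.unpack(">6I", data[:24])
    if rxid != xid or mtype != 1 or reply_stat != 0:
        raise ValueError("unexpected or denied RPC reply")
    offset = 24 + ((vlen + 3) & ~3)              # skip verifier body, padded to 4 bytes
    accept_stat, port = struct.unpack(">2I", data[offset:offset + 8])
    if accept_stat != 0:
        raise ValueError(f"RPC call not accepted (accept_stat={accept_stat})")
    return port


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or fail if the peer closes the connection early."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-reply")
        buf += chunk
    return buf


def query_mountd_port(server: str, timeout: float = 5.0) -> int:
    """Ask the portmapper on TCP 111 where mountd v3/TCP is listening.

    A socket timeout here is the rough equivalent of the VMkernel's
    'RPC was aborted due to timeout'.
    """
    xid = 0x4E465331
    with socket.create_connection((server, 111), timeout=timeout) as sock:
        sock.sendall(build_getport_call(xid))
        mark = _recv_exact(sock, 4)
        length = int.from_bytes(mark, "big") & 0x7FFFFFFF
        reply = mark + _recv_exact(sock, length)
    return parse_getport_reply(reply, xid)
```

Calling `query_mountd_port("<filer-ip>")` should return a nonzero port if mountd is registered and reachable; a timeout points at routing or filtering between the two networks rather than at the export rules.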

[root@xxx ~]# esxcfg-route

VMkernel default gateway is x.x.100.1

[root@xxx ~]# vmkping -D

PING x.x.100.140 (x.x.100.140): 56 data bytes

64 bytes from x.x.100.140: icmp_seq=0 ttl=64 time=0.434 ms

64 bytes from x.x.100.140: icmp_seq=1 ttl=64 time=0.049 ms

64 bytes from x.x.100.140: icmp_seq=2 ttl=64 time=0.046 ms

--- x.x.100.140 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.046/0.176/0.434 ms

PING x.x.100.1 (x.x.100.1): 56 data bytes

64 bytes from x.x.100.1: icmp_seq=0 ttl=255 time=0.999 ms

64 bytes from x.x.100.1: icmp_seq=1 ttl=255 time=0.837 ms

64 bytes from x.x.100.1: icmp_seq=2 ttl=255 time=0.763 ms

--- x.x.100.1 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.763/0.866/0.999 ms

[root@xxx]# ping x.x.101.124

PING x.x.101.124 (10.1.101.124) 56(84) bytes of data.

64 bytes from x.1.101.124: icmp_seq=1 ttl=254 time=0.507 ms

64 bytes from x.1.101.124: icmp_seq=2 ttl=254 time=0.554 ms

64 bytes from x.1.101.124: icmp_seq=3 ttl=254 time=0.644 ms

--- x.1.101.124 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.507/0.568/0.644/0.060 ms

netappsim1> exportfs

/vol/vol0/home -sec=sys,rw,nosuid

/vol/vol0 -sec=sys,rw,anon=0,nosuid

/vol/vol1 -sec=sys,rw,root=x.x.130.17

Well, there are a few guidelines that should be followed when mounting an NFS datastore on an ESX host.

Here is the list of requirements:

1. Only root should have access to the NFS volume.

2. Only one ESX host should be able to mount the NFS datastore.
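The two requirements translate into export options: the ESX host needs root access (`root=`), and only that one host should get read-write access (`rw=` listing a single address). As a quick way to check an existing rule against them, here is a minimal sketch that parses one line of `exportfs` output in the simple `path -opt,opt=value` form shown above. The function names are hypothetical, host lists are assumed to be colon-separated as in 7-mode exports, and it deliberately ignores `ro=`, netgroups, subnets, `-` negations and `anon=0`.

```python
from __future__ import annotations


def parse_export_line(line: str) -> tuple[str, dict[str, str | None]]:
    """Split '/vol/vol1 -sec=sys,rw=a:b,root=a' into (path, {option: value})."""
    path, _, opts = line.strip().partition(" ")
    options: dict[str, str | None] = {}
    for opt in opts.strip().lstrip("-").split(","):
        if not opt:
            continue
        key, sep, value = opt.partition("=")
        options[key] = value if sep else None  # bare flags such as 'rw' map to None
    return path, options


def export_meets_requirements(line: str, vmk_ip: str) -> bool:
    """Requirement 1: vmk_ip has root access. Requirement 2: only vmk_ip gets rw."""
    _, options = parse_export_line(line)
    rw_hosts = options.get("rw")
    root_hosts = options.get("root")
    # A bare 'rw' (value None) fails because it lets every host write;
    # a missing 'rw' is also treated as failing, to stay conservative.
    only_this_host_rw = rw_hosts is not None and rw_hosts.split(":") == [vmk_ip]
    has_root = root_hosts is not None and vmk_ip in root_hosts.split(":")
    return only_this_host_rw and has_root
```

For example, the `/vol/vol1 -sec=sys,rw,root=x.x.130.17` rule from the simulator above fails for any VMkernel address, because the bare `rw` opens the volume to every host.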

How do we achieve this?

1. Create a volume on the NetApp filer in one of the aggregates. Once the volume is created, we need to export it.

2. The export can be done from the CLI as follows:

srm-protect> exportfs -p rw=10.21.64.34,root=10.21.64.34 /vol/vol2

srm-protect> exportfs

/vol/vol0/home -sec=sys,rw,nosuid

/vol/vol0 -sec=sys,rw,anon=0,nosuid

/vol/vol1 -sec=sys,rw=10.21.64.34,root=10.21.64.34

Something to remember here: the IP address 10.21.64.34 belongs to the VMkernel port, which must be created before mounting the NFS volume on the ESX host.

When creating the VMkernel port, make sure its IP address is in the same subnet as the NFS server; otherwise you will get the error shown above. After changing the IP subnet, I was able to mount the NFS datastore.
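Before creating the VMkernel port, the same-subnet rule can be checked with Python's standard `ipaddress` module. This is a minimal sketch; the post does not state the netmask in use, so the example addresses (hypothetical 192.168.x.x values mirroring the .100 VMkernel and .101 filer layout above) and the 255.255.255.0 mask are assumptions.

```python
import ipaddress


def same_subnet(vmk_ip: str, netmask: str, nfs_server_ip: str) -> bool:
    """True if nfs_server_ip lies inside the VMkernel port's own subnet.

    netmask may be dotted ('255.255.255.0') or a prefix length ('24').
    """
    network = ipaddress.ip_network(f"{vmk_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(nfs_server_ip) in network
```

With a /24 mask, `same_subnet("192.168.100.140", "255.255.255.0", "192.168.101.124")` is `False`, matching the failing setup; moving the VMkernel port into the filer's .101 network makes it `True`.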

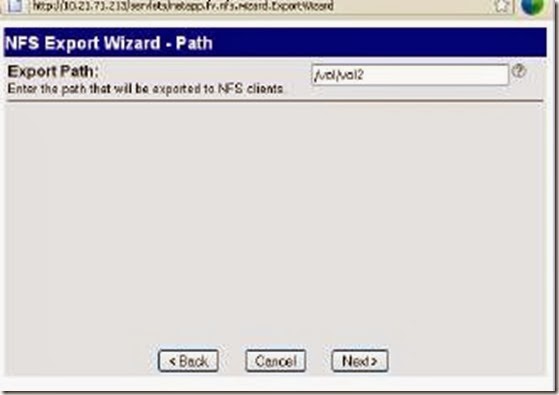

3. From FilerView, go to NFS > Manage Exports:

In the Export Options window, the Read-Write Access and Security check boxes are already selected. You will also need to select the Root Access check box as shown here. Then click Next:

Leave the Export Path at the default setting:

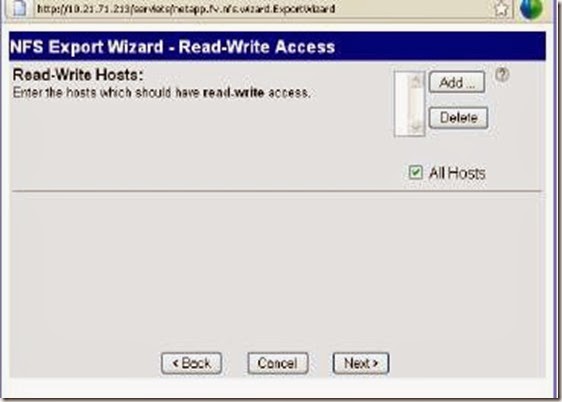

In the Read-Write Hosts window, click Add to explicitly add a read-write host. Here you need to enter the IP address of the VMkernel port, not the service console of the ESX host; otherwise the rights will not be applied.

Populate the Host to Add field with the VMkernel IP address:

The Read-Write Hosts window should now include my VMkernel IP address. Click Next to move to the Root Hosts window:

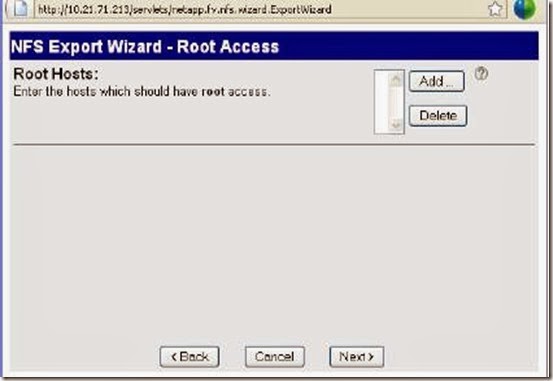

Populate the Root Hosts list exactly the same way as the Read-Write Hosts, by clicking the Add button. This should again be the VMkernel (IP storage) IP address. When it is populated, click Next:

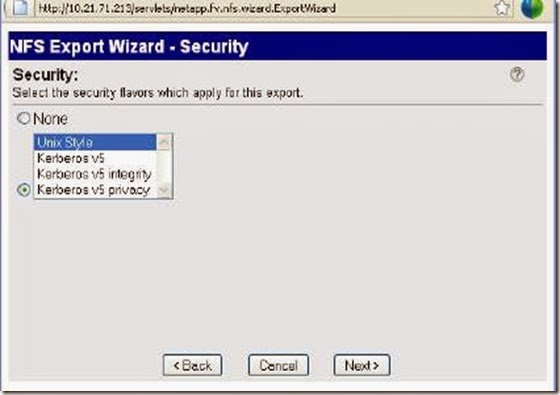

At the Security screen, leave the security flavour at the default of Unix Style and click Next to continue:
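Taken together, the wizard screens set roughly the same options as the CLI command in step 2. As far as I know, the Unix Style security flavour corresponds to `sec=sys`, which is why `exportfs` lists `-sec=sys` for these exports. The helper below is a hypothetical sketch that assembles that command string from a volume path and a VMkernel IP, so the two methods can be compared side by side.

```python
def exportfs_command(path: str, vmk_ip: str) -> str:
    """Build a 7-mode 'exportfs -p' line mirroring the FilerView wizard choices."""
    options = ",".join([
        "sec=sys",         # Security screen: Unix Style (AUTH_SYS)
        f"rw={vmk_ip}",    # Read-Write Hosts: only the VMkernel IP
        f"root={vmk_ip}",  # Root Hosts: the same VMkernel IP
    ])
    # -p also writes the rule to /etc/exports so it survives a filer reboot.
    return f"exportfs -p {options} {path}"
```

For the example in step 2, `exportfs_command("/vol/vol2", "10.21.64.34")` produces `exportfs -p sec=sys,rw=10.21.64.34,root=10.21.64.34 /vol/vol2`.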