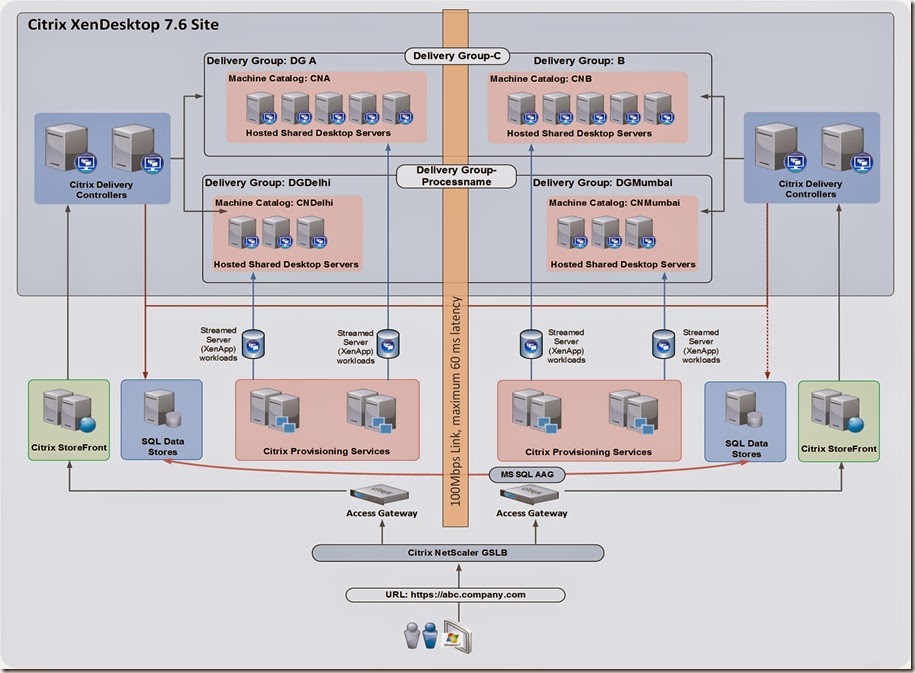

We quite often get the requirement to load balance users across datacenters and provide DR with XenDesktop. There are plenty of articles that will help you design for that. What we are discussing here, however, is load balancing users within a delivery group.

Requirement: Load balance the users of a delivery group across datacenters. If there are 100 users in a particular use case, 50 users should be directed to datacenter A and 50 users to datacenter B.

Challenge: It would have been easy if we only had to load balance users; we could have used GSLB to distribute them across the datacenters in round-robin fashion. Delivery groups bring their own challenge. To achieve this we need a single farm (single Site) architecture, and a single farm requires SQL availability across both locations. The challenge is the required bandwidth and the latency within India: latency between two cities in India is typically around 60 ms.
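An idealized sketch of the round-robin split the requirement asks for: each new user is handed to the next datacenter in turn, so 100 users split 50/50. This assumes strict per-user rotation; real GSLB answers DNS queries, and resolver caching can skew the split. The user IDs and datacenter names are hypothetical.

```python
from collections import Counter
from itertools import cycle


def assign_round_robin(users, datacenters):
    """Assign each user to the next datacenter in strict rotation (idealized GSLB)."""
    rotation = cycle(datacenters)
    return {user: next(rotation) for user in users}


# Hypothetical user IDs and datacenter names.
users = [f"user{i:03d}" for i in range(1, 101)]
assignment = assign_round_robin(users, ["DC-A", "DC-B"])
print(dict(Counter(assignment.values())))  # {'DC-A': 50, 'DC-B': 50}
```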

Gotchas: Profile availability across datacenters. Microsoft does not support profile replication, so if users need their profiles, we must use two separate profile stores, one at each datacenter.
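The consequence of this gotcha can be sketched in a few lines: with one store per datacenter and no replication, the profile a user gets depends on which datacenter the session lands in. The share names and path layout below are hypothetical.

```python
# Hypothetical file shares: one independent profile store per datacenter.
PROFILE_STORES = {
    "DC-A": r"\\fs-dca\profiles$",
    "DC-B": r"\\fs-dcb\profiles$",
}


def profile_path(username: str, datacenter: str) -> str:
    """Return the user's profile path in the store local to the session's datacenter."""
    return PROFILE_STORES[datacenter] + "\\" + username


# The same user has two unrelated profile copies, depending on where the session lands.
print(profile_path("alice", "DC-A"))  # \\fs-dca\profiles$\alice
print(profile_path("alice", "DC-B"))  # \\fs-dcb\profiles$\alice
```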

How to achieve this: To start with, here is a drawing to keep it simple.

Component configuration:

Two Delivery Controllers in each datacenter: a total of four Delivery Controllers are part of the single XenApp/XenDesktop Site. But here is the catch: the VMs in each datacenter point to their local Delivery Controllers, so each VM registers only with the Delivery Controllers in its own datacenter.

Two StoreFront servers in each datacenter: the two StoreFront servers at each datacenter form a cluster. NetScaler is used to load balance the StoreFront clusters across the datacenters.

Separate PVS farm in each datacenter: each farm streams the VMs in its own datacenter.
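The component layout above can be modelled as a small data structure: one Site containing all four controllers, while each VDA gets a controller list with only its local pair. In practice that list would typically go into the VDA's ListOfDDCs registry value or the equivalent Controllers policy setting (space-separated). All host names below are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Datacenter:
    name: str
    controllers: list[str]   # Delivery Controllers in this datacenter
    storefront: list[str]    # StoreFront cluster members
    pvs_servers: list[str]   # PVS farm streaming this datacenter's VMs


# Hypothetical host names for the layout described above.
SITE = [
    Datacenter("DC-A", ["ddc-a1", "ddc-a2"], ["sf-a1", "sf-a2"], ["pvs-a1", "pvs-a2"]),
    Datacenter("DC-B", ["ddc-b1", "ddc-b2"], ["sf-b1", "sf-b2"], ["pvs-b1", "pvs-b2"]),
]


def site_controllers(site: list[Datacenter]) -> list[str]:
    """All Delivery Controllers in the single Site (both datacenters)."""
    return [c for dc in site for c in dc.controllers]


def vda_controller_list(site: list[Datacenter], vda_datacenter: str) -> str:
    """Space-separated controller list for a VDA: local controllers only."""
    for dc in site:
        if dc.name == vda_datacenter:
            return " ".join(dc.controllers)
    raise KeyError(vda_datacenter)


print(site_controllers(SITE))             # ['ddc-a1', 'ddc-a2', 'ddc-b1', 'ddc-b2']
print(vda_controller_list(SITE, "DC-A"))  # ddc-a1 ddc-a2
```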

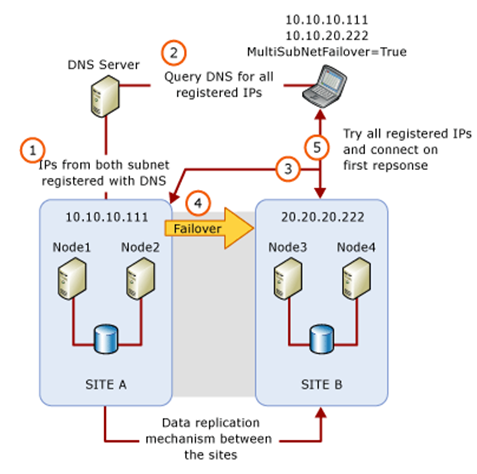

Now to the important part: the SQL setup. There are many ways to set up SQL for database replication, and I am not going to explain them here; you can refer to articles like this to get it configured. What I will explain is how we did the setup in our environment. Two SQL servers have been set up in a multi-subnet failover cluster, similar to what is explained here.

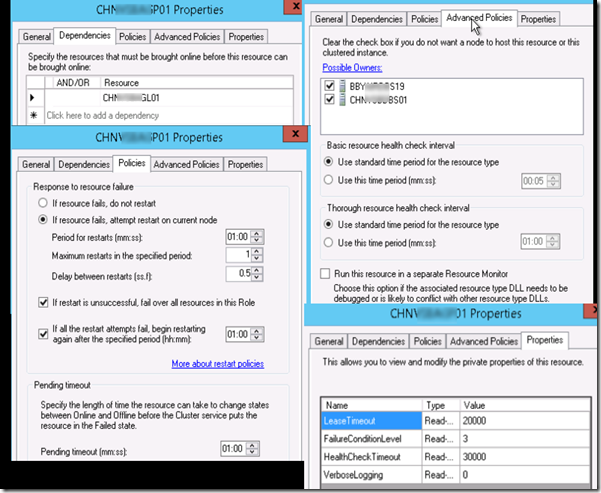

In our case we have one node at each site. The Windows Failover Cluster (WFC) has been set up with the following roles:

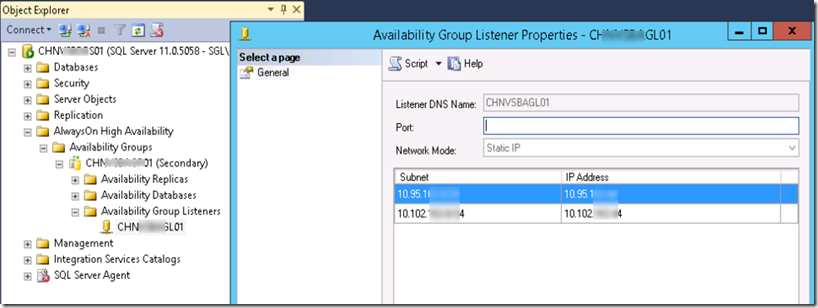

Under the server name there are two IPs (one per subnet), and these are used by the Availability Group listener in SQL.

Understanding the WFC resource properties is important for failover.
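Two properties of the listener's network name resource largely decide how long clients wait after a failover: RegisterAllProvidersIP (whether both subnet IPs are published in DNS) and HostRecordTTL (how long clients may cache the DNS record; 1200 seconds by default). A rough back-of-the-envelope model, assuming clients connect with MultiSubnetFailover=True and ignoring replication delay between DNS servers; the 30-second cluster failover time is an assumed figure, not a measurement.

```python
def worst_case_reconnect_seconds(register_all_providers_ip: bool,
                                 host_record_ttl: int,
                                 cluster_failover: int) -> int:
    """Rough upper bound on how long a client may be unable to reach the new primary.

    Model assumptions: the client uses MultiSubnetFailover=True, and DNS
    replication between DNS servers is ignored.
    """
    if register_all_providers_ip:
        # Both listener IPs are already in DNS; the client tries them in
        # parallel, so only the cluster failover itself counts.
        return cluster_failover
    # Only the online IP is registered: the client may keep using its cached,
    # now stale, record until the TTL expires.
    return cluster_failover + host_record_ttl


# Assumed 30 s cluster failover; 1200 s is the default HostRecordTTL.
print(worst_case_reconnect_seconds(True, 1200, 30))   # 30
print(worst_case_reconnect_seconds(False, 1200, 30))  # 1230
print(worst_case_reconnect_seconds(False, 300, 30))   # 330
```

The last line shows why lowering HostRecordTTL matters if only the online IP is registered in DNS, which is the situation where failover "waits till the DNS update happens".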

This is what the listener group looks like:

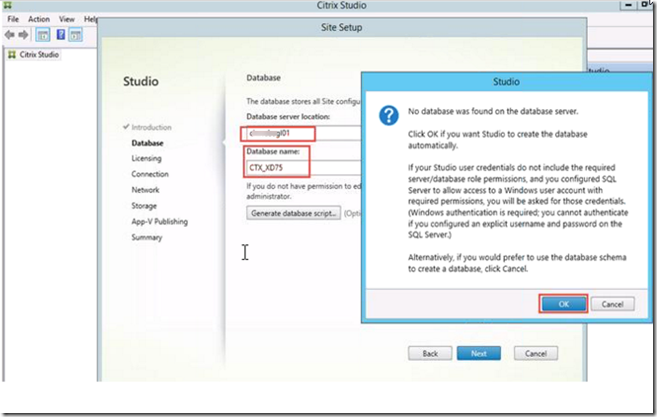

We are replicating three databases, a) Site, b) Logging and c) Monitoring, using AlwaysOn High Availability. During Site creation we pointed Studio to the listener and let Studio create the databases. Once the databases were set up, they were moved into the AlwaysOn group.

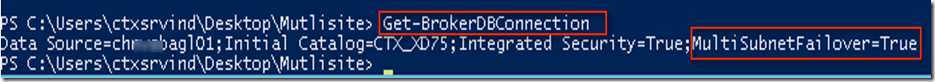

Then we separated the databases and moved each one separately. Time for testing? Not yet: before testing, we have to follow a few more steps to ensure the Delivery Controllers are MultiSubnet-aware and the logins are replicated. To do so, I followed a Citrix blog and downloaded the script listed here. Now it's time for PowerShell magic: open PowerShell from Desktop Studio and check that you have all the scripts. We need to run Change_XD_TO_MultiSubnetFailover.ps1.

Once the script has executed, the database connections are updated. After this, running Get-BrokerDBConnection shows MultiSubnetFailover=True in the connection string.
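Conceptually, the change amounts to one keyword added to each Controller service's database connection string. A sketch of that string transformation only (not of the Citrix script itself), assuming a simple `key=value;` format without quoted values containing semicolons; the listener and database names are hypothetical.

```python
def with_multisubnet_failover(conn_str: str) -> str:
    """Return conn_str with MultiSubnetFailover=True, replacing any existing value."""
    parts = [p for p in conn_str.split(";") if p.strip()]
    # Drop any existing MultiSubnetFailover keyword (keywords are case-insensitive).
    kept = [p for p in parts
            if p.split("=", 1)[0].strip().lower() != "multisubnetfailover"]
    kept.append("MultiSubnetFailover=True")
    return ";".join(kept) + ";"


# Hypothetical listener and database names.
original = "Server=XDListener;Initial Catalog=CitrixSite;Integrated Security=True"
print(with_multisubnet_failover(original))
# Server=XDListener;Initial Catalog=CitrixSite;Integrated Security=True;MultiSubnetFailover=True;
```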

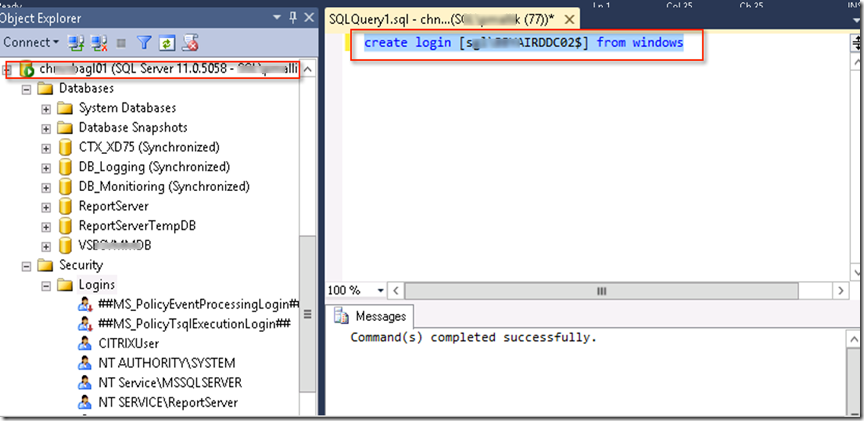

Now make sure logins for all the Delivery Controllers are created on the replica SQL server.
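Logins are server-level objects stored in master, so unlike the users inside the Site, Logging and Monitoring databases they are not carried across by the availability group. The Controllers connect with their machine accounts, so the replica needs one Windows login per Controller. A small sketch that generates the T-SQL; the domain and Controller names are hypothetical.

```python
from __future__ import annotations


def create_login_statements(domain: str, controllers: list[str]) -> list[str]:
    """One CREATE LOGIN per Delivery Controller machine account (note the trailing $)."""
    return [f"CREATE LOGIN [{domain}\\{name}$] FROM WINDOWS;" for name in controllers]


# Hypothetical domain and Controller names; run the output on the replica.
for stmt in create_login_statements("CORP", ["DDC-A1", "DDC-A2", "DDC-B1", "DDC-B2"]):
    print(stmt)
# First line printed: CREATE LOGIN [CORP\DDC-A1$] FROM WINDOWS;
```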

The Delivery Controllers are now ready for failover testing. Next, we create a delivery group for datacenter A mapped to the corresponding catalog, and a separate delivery group for datacenter B mapped to the catalog for that datacenter.

Now we need to publish the application to both delivery groups:

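```powershell
# Add the same published application to the delivery group in each datacenter.
# Assumes "Published App Name" already exists in the Site.
Add-BrokerApplication -Name "Published App Name" -DesktopGroup "Delivery Group A"
Add-BrokerApplication -Name "Published App Name" -DesktopGroup "Delivery Group B"
```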

So what is the end result? Users hit the GSLB, which distributes them across the datacenters in round-robin fashion. Each user lands on one of the load-balanced StoreFront servers and gets access to the application. Users are distributed round robin, but they all launch the same published application. Because no priority is defined between the two delivery groups (a failover priority can be defined for a delivery group), the broker distributes users across the VMs of both groups. If one of the datacenters goes down, the SQL connection fails over to the other site; this has to wait until DNS is updated and the listener IP changes to the other site. Until then we rely on the connection leasing feature of XD 7.6, which is similar to the Local Host Cache (LHC) of XenApp 6.5.
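A simplified model of that behaviour: the application is published to both delivery groups with the same priority, so launches spread across the VMs of both; when one datacenter's group goes offline, launches go to the other. Assumptions: lower priority numbers are preferred, and "least loaded" is approximated by session count, whereas the real broker uses its own load evaluation. Group and VM names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DeliveryGroup:
    name: str
    priority: int              # lower number = preferred (model assumption)
    sessions: dict[str, int]   # VM name -> current session count
    online: bool = True


def launch(groups: list[DeliveryGroup]) -> tuple[str, str]:
    """Place one session: best available priority tier, then least-loaded VM."""
    available = [g for g in groups if g.online and g.sessions]
    if not available:
        raise RuntimeError("no delivery group available")
    best = min(g.priority for g in available)
    candidates = [(g, vm) for g in available if g.priority == best for vm in g.sessions]
    group, vm = min(candidates, key=lambda gv: gv[0].sessions[gv[1]])
    group.sessions[vm] += 1
    return group.name, vm


def total(group: DeliveryGroup) -> int:
    return sum(group.sessions.values())


dg_a = DeliveryGroup("Delivery Group A", 0, {"vm-a1": 0, "vm-a2": 0})
dg_b = DeliveryGroup("Delivery Group B", 0, {"vm-b1": 0, "vm-b2": 0})

for _ in range(100):
    launch([dg_a, dg_b])
print(total(dg_a), total(dg_b))  # 50 50

dg_b.online = False              # datacenter B goes down
for _ in range(10):
    launch([dg_a, dg_b])
print(total(dg_a), total(dg_b))  # 60 50
```

Giving one group a higher priority number in this model would send every launch to the other group until it goes offline, which is the active/passive alternative.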

Drop a note in case you have questions.

3 comments:

This is an excellent article. I am trying to achieve the same between two datacenters, where the link between them has a latency of 19 ms.

I would love to get in touch with you.

If you can provide your details I would appreciate it, or even just your email ID so I can start an initial conversation.

Hi, it is a good document, but how did you overcome the challenge of managing the hypervisor? VMware vCenter, SCVMM for Hyper-V, etc.

Also, what about persistent virtual desktops?

The hypervisor is beyond my scope :) but I will still try to answer. We built two independent controls for managing the hypervisor. Persistent desktops are a different ballgame: we would need to worry about replicating data and settings across both sites, which is out of scope since MS doesn't support it; otherwise it has to be an active-passive DC setup.

HTH
