Wednesday, December 16, 2009

The Parent Virtual Disk has been modified since child was created for VMware Workstation

HOW TO for Windows and VMware Workstation:

Using this article as a baseline, use this CMD command instead of "grep": find "CID" "x:\folder\base-file.vmdk"

Use CTRL+C to abort the listing after the first few lines.

Take note of the CID=xxxxxxxx

If you have not already done so, download and install HEX EDIT FREE (I used v3.0f).

NOTE: Since the .vmdk files can contain both the CID and the whole disk data, they are both fragile AND HUGE! Take special care when editing these files. Always take a backup copy first and make it write-protected!!

This is also why you need a special editor (like HEX EDIT) to edit the file the right way.

When ready, start the hex editor and open the first snapshot file (e.g. base-file-000001.vmdk).

Find the "parentCID=" string in the ASCII section of the editor and place the cursor on the first character of its value. Then type the CID from the base file over it.

If prompted, say YES to disable write protection, BUT NO to disable Replace mode. This is important: it keeps the file exactly the way it was, changing only the CID value.

Repeat this process for the other .vmdk files in your chain if any snapshot files in the middle of the chain have been opened.
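If you would rather script the fix than type it by hand, the Python sketch below reads the `CID=` and `parentCID=` values from a descriptor and overwrites the child's 8-digit `parentCID` value in place, which is the same change Replace mode makes in the hex editor. It assumes the text descriptor sits within the first 4 MB of the .vmdk; the function names are hypothetical, and you should run it against your backup copy first.

```python
import re

# Assumption: the text descriptor (the CID=/parentCID= lines) sits within the
# first few MB of the .vmdk, either as a separate descriptor or embedded.
SCAN_BYTES = 4 * 1024 * 1024
CID_RE = re.compile(rb"(?m)^(CID|parentCID)=([0-9a-fA-F]{8})")
PARENT_RE = re.compile(rb"(?m)^parentCID=([0-9a-fA-F]{8})")


def find_cids(head: bytes) -> dict:
    """Return the first CID and parentCID values found in the descriptor bytes."""
    found = {}
    for m in CID_RE.finditer(head):
        found.setdefault(m.group(1).decode("ascii"), m.group(2).decode("ascii"))
    return found


def read_cids(path: str) -> dict:
    with open(path, "rb") as f:
        return find_cids(f.read(SCAN_BYTES))


def set_parent_cid(path: str, new_cid: str) -> None:
    """Overwrite the 8 hex digits after parentCID= in place (like Replace mode)."""
    if not re.fullmatch(r"[0-9a-fA-F]{8}", new_cid):
        raise ValueError("a CID is exactly 8 hex digits")
    with open(path, "r+b") as f:
        m = PARENT_RE.search(f.read(SCAN_BYTES))
        if m is None:
            raise ValueError(f"parentCID= not found in {path}")
        f.seek(m.start(1))                 # offset of the old value
        f.write(new_cid.encode("ascii"))   # same length, so the file size is unchanged
```

Typical use: take `read_cids(r"x:\folder\base-file.vmdk")["CID"]` and pass it to `set_parent_cid(r"x:\folder\base-file-000001.vmdk", ...)`.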

Good luck with all your restores, people.

And PLEASE VMware, make your programs check for this when mounting a new hard disk file! ;)

Monday, December 14, 2009

Deep Dive Into SCSI Reservation

First I will describe the problem and then briefly discuss SCSI reservations. Some of the content comes from support personnel. This is just to spread knowledge; I am not claiming credit for it.

Problem: We had a 5-host ESX cluster running ESX 3.5 U4 on DL380 G5 servers, each with 32 GB of memory and 8 logical CPUs.

Suddenly we realized that 3 of the ESX hosts were disconnected from VirtualCenter. I restarted the “mgmt-vmware” service, but no luck. I then disconnected the ESX host from VC and also removed it, but we were still not able to add the host back. Finally I tried to log in to the ESX host directly using the VI client, and that failed too. That was when we realized there was a serious issue.

I then tried to cd into /vmfs/volumes and it gave me the following message:

I checked all the ESX hosts and each one had the same issue: I could cd into any directory except /vmfs. This confirmed that something had happened to the VMFS volumes.

I ran the QLogic utility “iscli” and performed HBA-level troubleshooting. The HBA did report an issue:

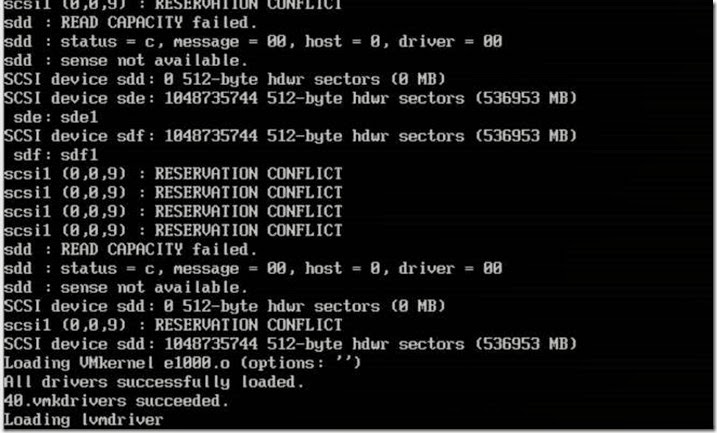

I then checked the vmkernel log, which made me very suspicious: it had lots of SCSI reservation-related errors. All 3 ESX hosts showed similar errors.
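A quick way to see how widespread the reservation errors are is to count them per device. The Python sketch below assumes the relevant vmkernel lines contain the phrase "reservation conflict" and name the device in the ESX 3.x `vmhbaA:T:L` form; both patterns are assumptions to adjust against your own log, and `conflicts_per_device` is a hypothetical helper.

```python
import re
from collections import Counter

# Assumptions: conflict lines contain "reservation conflict" (any case) and
# devices appear as vmhbaA:T:L. Adjust both to match your own vmkernel log.
CONFLICT_RE = re.compile(r"reservation conflict", re.IGNORECASE)
DEVICE_RE = re.compile(r"vmhba\d+:\d+:\d+")


def conflicts_per_device(lines) -> Counter:
    """Count reservation-conflict lines per device; lines without a device go under 'unknown'."""
    counts = Counter()
    for line in lines:
        if CONFLICT_RE.search(line):
            m = DEVICE_RE.search(line)
            counts[m.group(0) if m else "unknown"] += 1
    return counts
```

On an ESX 3.x host the log is typically /var/log/vmkernel, so opening it with `errors="replace"` and calling `conflicts_per_device(log).most_common(5)` lists the noisiest devices.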

Since this was a production environment, I decided not to waste time and contacted VMware for a solution. After a WebEx session, VMware gave the following recommendations to fix the issue:

- Put each ESX host into maintenance mode (only one at a time)

- Allow the VMs to be migrated off

- Reboot the ESX host

- Repeat the procedure for each ESX host

OR

- Fail over the active storage processor (contact your SAN vendor for assistance with this)

Well, I could not put the ESX hosts into maintenance mode (‘MM’) since all of them share the LUNs: although only 3 had reported the problem, the other two ESX hosts were not able to see the LUNs either. I checked with the storage admin about a possible failover, and he declined since the filer is also used by other applications. Finally I decided to reboot the ESX hosts, a shortcut that fixes the issue at the cost of maximum outage. After rebooting the ESX host I saw the following messages on the console:

After the reboot, the ESX host was not able to come back online. One of the ESX hosts came online after about an hour. Once it appeared in VC, I found that none of the LUNs were mounted. I ran a rescan, without any success. I then ran HBA-level troubleshooting and found that I was able to reach the filer IP from the HBA BIOS. I then found that the target IPs were those of the vswif (the virtual interface on the NetApp filer to which all physical NICs are tied for fault tolerance and redundancy). Just for testing, I added the physical IP of the target filer's interface and ran a rescan. I was then able to see all the VMs.

I checked with the network folks and they confirmed that there was a routing issue between the vswif network and the iSCSI network. They are on two different VLANs (not sure why).

I then put the rest of the hosts into recovery mode and disabled the HBA. After that they came online quickly, and I then added the correct target IP.

That is how we fixed this issue.

So now the question: why did it happen?

SCSI reservations are used during the snapshot process. It seems there was a stale SCSI reservation on the storage/HBA, which can cause snapshots to fail as well as performance problems.

So what is a SCSI reservation?

ESX uses a locking mechanism called "SCSI reservation" to share LUNs between ESX hosts. These reservations are non-persistent and are released when the activity that required them is completed. The Service Console regularly monitors the LUNs and checks for any reservations that have aged too long. The ESX host will then try to release the lock. However, if another application running from the Service Console is using the LUN, it can immediately reclaim the LUN or place another reservation. Thus, if third-party applications are not designed to release their locks, we see a continuous flood of heartbeat reclaiming events in the logs.

SCSI reservations are needed to prevent data corruption in environments where LUNs are shared between many hosts.

Every time a host tries to update the VMFS metadata, it needs to place a SCSI reservation on the LUN.

When two hosts try to reserve the same LUN at the same time, a SCSI reservation conflict occurs; if the number of reservation conflicts is too large, ESX fails the I/O.

SCSI reservations, status busy messages and Windows symmpi errors can be a sign of SAN latency or of a failure to release SCSI file locks when requested. The SAN storage processors can hold the reservation, which then requires a LUN reset or an SP reboot.

VMware ESX 3.0.x and 3.5 use "Exclusive Logical Unit Reservations" (SCSI-2 style reservations). These reservations are used whenever the VMkernel applies changes to the VMFS metadata. This prevents multiple hosts from concurrently writing to the metadata and is the process used to prevent VMFS corruption. After the metadata update is done, the VMkernel releases the reservation.

Examples of VMFS operations that require metadata updates are:

* Creating or deleting a VMFS data store

* Expanding a VMFS data store onto additional extents

* Powering on or off a VM

* Acquiring or releasing a lock on a file

* Creating or deleting a file

* Creating a template

* Deploying a VM from a template

* Creating a new VM

* Migrating a VM with VMotion

* Growing a file (e.g. a Snapshot file or a thin provisioned Virtual Disk)

When the VMkernel requests a SCSI Reservation of a LUN from the storage array, it attempts the metadata updates of the VMFS volume on the LUN. If the LUN is still reserved by another host and the reservation has not been granted to this host yet, the I/O fails due to reservation conflict and the VMkernel retries the command up to 80 times. The VMkernel log shows the “Conflict Retries” (CR) count remaining which counts down to zero. The retry interval is randomized between hosts to prevent concurrent retries on the same LUN from different hosts which improves the chances of acquiring a reservation.

If the number of retries has been exhausted, the I/O fails due to too many SCSI reservation conflicts.
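To make the retry behaviour concrete, here is a toy Python model (not VMkernel code) of one host retrying a reservation up to 80 times with a randomized interval. `Lun`, its `conflicts_before_release` knob and the sleep range are hypothetical simulation details; a holder that never releases stands in for the stale reservation described earlier.

```python
import random
import time

MAX_CONFLICT_RETRIES = 80  # retry budget quoted in the KB text above


class Lun:
    """Toy LUN with one exclusive (SCSI-2 style) reservation slot."""

    def __init__(self, holder=None, conflicts_before_release=None):
        self.holder = holder
        # Simulation knob (hypothetical): how many conflicting requests the
        # current holder causes before releasing; None models a stale reservation.
        self.conflicts_left = conflicts_before_release

    def reserve(self, host) -> bool:
        if self.holder not in (None, host):
            if self.conflicts_left is None or self.conflicts_left > 0:
                if self.conflicts_left is not None:
                    self.conflicts_left -= 1
                return False                  # reservation conflict
            self.holder = None                # other host finished its update
        self.holder = host
        return True

    def release(self, host) -> None:
        if self.holder == host:
            self.holder = None


def reserve_with_retries(lun, host, rng, sleep=time.sleep,
                         max_retries=MAX_CONFLICT_RETRIES) -> int:
    """Return how many retries were used; raise once the CR count reaches zero."""
    retries_left = max_retries                # the "CR" value counting down
    while not lun.reserve(host):
        if retries_left == 0:
            raise OSError(f"{host}: too many SCSI reservation conflicts")
        retries_left -= 1
        sleep(rng.uniform(0.001, 0.01))       # randomized interval (range is an assumption)
    return max_retries - retries_left
```

Passing `sleep=lambda s: None` runs the model instantly, which is handy for experimenting with different `conflicts_before_release` values.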

Areas of SCSI reservation contention:

-- 3rd party software (agents)

-- firmware on SAN SP/HBA

-- pathing

-- Heavy I/O

Ways to mitigate the negative impact of SCSI reservations:

1. Try to serialize operations on shared LUNs; if possible, do not run operations that require SCSI reservations on different hosts at the same time.

2. Increase the number of LUNs and try to limit the number of ESX hosts accessing the same LUN.

3. Avoid using snapshots, as they cause a lot of SCSI reservations.

4. Do not schedule backups (VCB or console-based) in parallel from the same LUN.

5. Try to limit the number of VMs per LUN, and check which targets are being used to access the LUNs.

6. Check that you have the latest HBA firmware across all ESX hosts.

7. Check that the ESX hosts are running the latest BIOS (to avoid conflicts with HBA drivers).

8. Contact your SAN vendor for information on SP timeout values, performance settings and storage array firmware.

9. Turn off third-party agents (e.g. storage agents).

10. Watch out for MSCS RDMs (the active node holds a permanent reservation).

11. Ensure correct Host Mode setting on the SAN array

12. LUNs removed from the system without rescanning can appear as locked.
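Points 1 and 4 boil down to never running two reservation-heavy jobs against the same LUN at once. A minimal Python sketch of that idea, assuming you have a list of (VM, LUN) backup jobs: it builds "waves" in which each LUN appears at most once, so jobs on different LUNs can still run in parallel. `backup_waves` is a hypothetical helper, not part of VCB.

```python
from collections import defaultdict
from itertools import zip_longest


def backup_waves(jobs):
    """Group (vm, lun) jobs into waves where no LUN appears twice in one wave."""
    per_lun = defaultdict(list)
    for vm, lun in jobs:
        per_lun[lun].append(vm)          # keep each LUN's jobs in submission order
    # Wave i takes the i-th job from every LUN that still has one left.
    return [[vm for vm in wave if vm is not None]
            for wave in zip_longest(*per_lun.values())]
```

Running the waves one after another serializes work per LUN while keeping the overall backup window short.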

When the storage processor fails to release a reservation, either the release request didn’t come through (hardware, firmware or pathing problems), or third-party apps running on the Service Console didn’t send the release, or busy VM operations are still holding the lock.

Some of this content is taken from VMware KB 1005009, "Troubleshooting SCSI Reservation failures on Virtual Infrastructure 3 and 3.5": http://kb.vmware.com/kb/1005009

Some unanswered questions:

This brings other questions to mind: even if you have HA/DRS, you can still have an outage. Fault tolerance? How can that help if you messed up routing, or if someone pulls the HBA cable itself? HA kicks in only if you have a host failure or the Service Console goes down; if neither happens, an HA event will not occur. So we need to plan for storage-level HA. Why doesn’t VMware provide capabilities to handle storage-level HA as well? Yes, I know it involves multiple vendors, but can’t VMware work with them?

Friday, December 4, 2009

NFS: 898: RPC error 13 (RPC was aborted due to timeout) trying to get port for Mount Program (100005)

I was trying to mount an NFS datastore on vSphere 4.0 from my NetApp simulator (version 7.3), and I got the following error message in the vmkernel log while mounting:

WARNING: NFS: 898: RPC error 13 (RPC was aborted due to timeout) trying to get port for Mount Program (100005) Version (3) Protocol (TCP) on Server (x.x.101.124)

```
[root@xxx ~]# esxcfg-route
VMkernel default gateway is x.x.100.1

[root@xxx ~]# vmkping -D
PING x.x.100.140 (x.x.100.140): 56 data bytes
64 bytes from x.x.100.140: icmp_seq=0 ttl=64 time=0.434 ms
64 bytes from x.x.100.140: icmp_seq=1 ttl=64 time=0.049 ms
64 bytes from x.x.100.140: icmp_seq=2 ttl=64 time=0.046 ms

--- x.x.100.140 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.046/0.176/0.434 ms

PING x.x.100.1 (x.x.100.1): 56 data bytes
64 bytes from x.x.100.1: icmp_seq=0 ttl=255 time=0.999 ms
64 bytes from x.x.100.1: icmp_seq=1 ttl=255 time=0.837 ms
64 bytes from x.x.100.1: icmp_seq=2 ttl=255 time=0.763 ms

--- x.x.100.1 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.763/0.866/0.999 ms

[root@xxx]# ping x.x.101.124
PING x.x.101.124 (10.1.101.124) 56(84) bytes of data.
64 bytes from x.1.101.124: icmp_seq=1 ttl=254 time=0.507 ms
64 bytes from x.1.101.124: icmp_seq=2 ttl=254 time=0.554 ms
64 bytes from x.1.101.124: icmp_seq=3 ttl=254 time=0.644 ms

--- x.1.101.124 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 1999ms
rtt min/avg/max/mdev = 0.507/0.568/0.644/0.060 ms
```

```
netappsim1> exportfs
/vol/vol0/home -sec=sys,rw,nosuid
/vol/vol0 -sec=sys,rw,anon=0,nosuid
/vol/vol1 -sec=sys,rw,root=x.x.130.17
```

Well, there are a few guidelines that should be followed when mounting an NFS datastore on an ESX host.

Here is the list of requirements:

1. Only root should have access to the NFS volume.

2. Only one ESX host should be able to mount the NFS datastore.

How do we achieve this?

1. Create a volume on the NetApp filer in one of the aggregates. Once the volume is created, we need to export it.

2. This can be done from the CLI:

```
srm-protect> exportfs -p rw=10.21.64.34,root=10.21.64.34 /vol/vol2
srm-protect> exportfs
/vol/vol0/home -sec=sys,rw,nosuid
/vol/vol0 -sec=sys,rw,anon=0,nosuid
/vol/vol1 -sec=sys,rw=10.21.64.34,root=10.21.64.34
```

Something to remember here: the IP address 10.21.64.34 belongs to the VMkernel port, which must be created before mounting the NFS volume on the ESX host.
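Before mounting, it can help to confirm that an export line really grants the VMkernel IP both read-write and root access. The Python sketch below parses lines in the `exportfs` format shown above; it assumes multiple hosts in `rw=`/`root=` are colon-separated and treats a bare `rw` as "all hosts". The helper names are hypothetical.

```python
def parse_export(line: str):
    """Split an exportfs line such as '/vol/vol1 -sec=sys,rw=a,root=b' into (path, options)."""
    parts = line.split(None, 1)
    path = parts[0]
    opts = parts[1].strip().lstrip("-") if len(parts) > 1 else ""
    options = {}
    for item in filter(None, opts.split(",")):
        key, sep, value = item.partition("=")
        options[key] = value if sep else True  # bare flags like 'rw' or 'nosuid'
    return path, options


def grants_vmkernel(line: str, vmk_ip: str) -> bool:
    """True if the export gives the VMkernel IP both read-write and root access."""
    _, options = parse_export(line)
    rw = options.get("rw")
    # Assumption: host lists are colon-separated; a bare 'rw' means all hosts.
    rw_ok = rw is True or (isinstance(rw, str) and vmk_ip in rw.split(":"))
    root = options.get("root")
    root_ok = isinstance(root, str) and vmk_ip in root.split(":")
    return rw_ok and root_ok
```

Against the listing above, `/vol/vol1 -sec=sys,rw,root=x.x.130.17` fails the check for 10.21.64.34 because root access goes to a different host.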

When creating the VMkernel port, make sure its IP is in the same subnet as the NFS server, or else you will get the error above. After changing the IP subnet, I was able to mount the NFS datastore.
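The subnet check itself is easy to script. This sketch uses Python's standard `ipaddress` module; `same_subnet` is a hypothetical helper, and the /24 prefix in the example call is an assumption, so substitute your VMkernel port's real address and netmask.

```python
import ipaddress


def same_subnet(vmkernel_cidr: str, nfs_server_ip: str) -> bool:
    """True if the NFS server's IP falls inside the VMkernel port's subnet."""
    network = ipaddress.ip_interface(vmkernel_cidr).network  # e.g. 10.21.64.0/24
    return ipaddress.ip_address(nfs_server_ip) in network
```

For example, `same_subnet("10.21.64.34/24", nfs_ip)` returns False when the filer sits on another subnet, which is the situation that produced the RPC timeout above.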

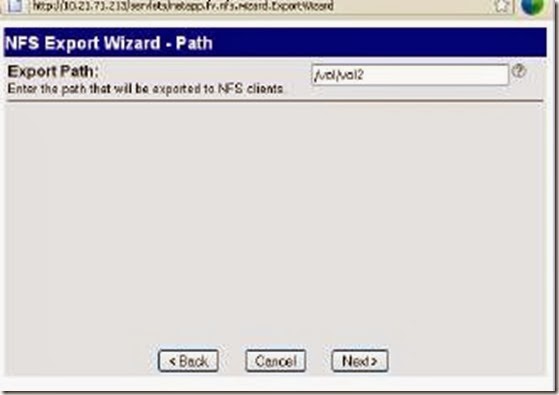

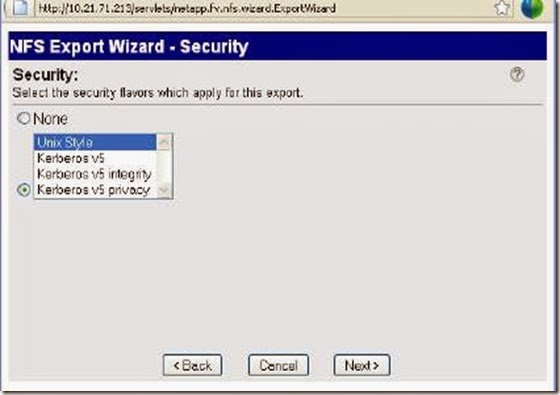

3. Alternatively, from FilerView go to NFS > Manage Exports:

In the Export Options window, the Read-Write Access and Security check boxes are already selected. You will also need to select the Root Access check box as shown here. Then click Next:

Leave the Export Path at the default setting:

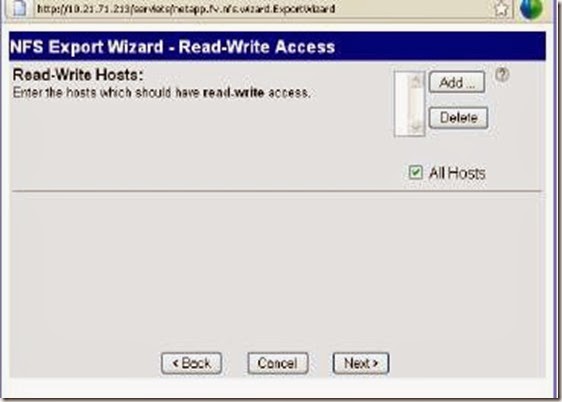

In the Read-Write Hosts window, click Add to explicitly add an RW host. Here you need to enter the IP address of the VMkernel port, not the Service Console of the ESX host, or else the rights will not be applied.

Populate the Host to Add with the (VMkernel) IP address:

The Read-Write Hosts window should now include my VMkernel IP address. Click Next to move to the Root Hosts window:

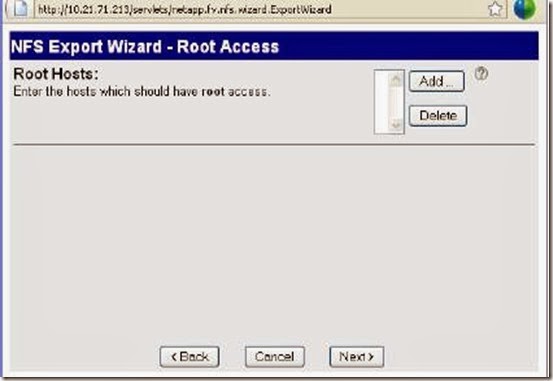

Populate the Root Hosts exactly the same way as the Read-Write Hosts, by clicking the Add button. This should again be the VMkernel/IP Storage IP address. When this is populated, click Next:

At the Security screen, leave the security flavour at the default of Unix Style and click Next to continue:

Wednesday, December 2, 2009

VMware Virtualization Seminar Series 2009 - Bangalore, India

I nominated myself and got the opportunity to attend the “VMware Virtualization Seminar Series 2009”, held here in Bangalore, India on 24th Nov. They arranged the seminar in one of the leading 5-star hotels in Bangalore. I’m not sure that was really required: people like me don’t need a nice 5-star buffet, but we do need 5/6-star quality technical knowledge sharing from such respected events. I was very disappointed with this event, and I gave VMware feedback on conducting such events in future. VMware seems to be rather spiritless about events in India, while such events are carried out with great pomp and show across the world. Maybe VMware doesn’t have a target audience here? But again, such events are meant to make the masses aware of the virtualization offerings from VMware and its partners. It’s not as if there is no local market for virtualization, but we need to create awareness in the local language. You cannot treat Indian business the same as other parts of the world. When I put questions to their “Senior VMware Technical Director” such as:

1. What challenges do you face dealing with local CEOs compared to CEOs in other parts of the world?

His answer was not convincing. He said the challenges are almost the same; I do not agree at all. What I have found is that CEOs are a little hesitant to make the initial investment if they are not yet virtualized, and Indian CEOs will be even more challenging because of VMware pricing. So my second question was about pricing.

2. Do you think you can make pricing a little more flexible in India to tap the local Indian market, the same way Microsoft's marketing strategy does when it comes to pricing?

His answer was that he cannot make pricing more competitive because of legal issues. It would be really tough.

Well, my dear VMware, you need to work on pricing if you want to tap the Indian market. You need to adopt Indian marketing strategies.

So here is the breakup of the agenda. I opted for Track II – Advanced, thinking that it would be very informative.

| Time | Topic |
|---|---|
| 8.30 am - 9.30 am | Registration |
| 9.30 am - 9.45 am | Keynote Address by T Srinivasan |
| 9.45 am - 10.30 am | The Future of Virtualization: |
| 10.30 am - 11.15 am | Presentation by EMC |
| 11.15 am - 11.30 am | Tea Break |
| 11.30 am - 12.15 pm | Presentation by DELL |
| 12.15 pm - 1.00 pm | Presentation by HP |
| 1.00 pm - 2.00 pm | Lunch |

BREAKOUT

| Time | Track I - Essentials | Track II - Advanced |
|---|---|---|
| 2.00 pm - 2.45 pm | The Virtual Reality - Overview of Fundamental VMware Technologies and Solution Offerings | Scaling your Virtualization Deployments using Architectural Advances in VMware vSphere 4 |
| 2.45 pm - 3.15 pm | Consolidation + Virtualization + WAN Optimization = True IT Efficiency | From Strategy to Reality - Rapid, Affordable & Automated DR Solutions using VMware SRM |
| 3.15 pm - 3.45 pm | Pay Less Do More - Realizing the Return on Investment with VMware | Building an agile infrastructure to support cloud-based application delivery by F5 Networks |
| 3.45 pm - 4.00 pm | Tea Break | |
| 4.00 pm - 4.30 pm | Analyze to Automate – the evolution of Vizioncore and how we truly extend VMware’s vSphere Presentation by Vizioncore Inc | The Journey from Servers to Desktop Virtualization - Operationalizing Virtual Desktops |
| 4.30 pm - 5.00 pm | Practical Approach to Virtualizing your Resource Intensive Tier 1 Applications like Oracle, Exchange, SAP, SQL | Presentation by VMware |
| 5.00 pm - 5.10 pm | Break | |
| 5.10 pm - 5.30 pm | Virtualization Competitive Differences - What the vendors aren't telling you | |
| 5.30 pm - 5.40 pm | Wrap up | |

First of all, they had assigned small booths to their partners. There were only a few of them, and at whichever stall I visited I could not see any techies present to answer queries. Everyone was saying "please drop your visiting card and we will get back to you". The VMware booth was a little informative, but again you could only watch videos; there was no hands-on.

The sessions started with a keynote from the VMware MD India, and let me tell you, it was not at all informative. Every keynote should be followed by Q&A, but he did not offer one. I guess you should give others a chance to understand what VMware India thinks?

The second keynote was from the Director of Technology, and again I was disappointed. The speaker did not know what to say to suit the target audience. Unless I know what vSphere is, how will I understand its key features?

She did make an interesting announcement, though: long-distance VMotion over WAN. Since she did not give us the opportunity to ask any questions, we are still guessing. It is already possible today, but I am guessing they will add some feature that optimizes WAN link utilization during this process. Let’s see.

The VMware partners’ presentations were even more disappointing. Dell/HP/EMC were trying to sell their products rather than explaining how they add value to the VMware solution. HP was trying hard to convince us that they are a one-stop shop for all virtualization solutions. I was surprised when the speaker said that they also troubleshoot VMware-related issues.

I found the EMC solution for “Automation of Storage Tiering” very interesting. What it does is move VMs based on performance: if a VM is I/O intensive it is put on FC drives, but if it is less I/O intensive it may be moved to SATA drives. I am not sure how it really works, but it looks like it would really help where we have a combination of disk types and are using EMC.

The second half was really boring, except for the presentation from “F5 Networks”.

The presentation from VMware was very, very disappointing. The speaker was in such a rush that he started the session much earlier than scheduled. We had to stand and attend the session for a while until they finally arranged chairs. He was rushing through each slide. Again, I cannot blame him alone: it should be the organizers’ responsibility to decide what the speaker has to cover. You cannot cover every vSphere feature in 45 minutes. It is simply not possible.

The SRM presentation was again very, very poor. I came to know that they had invited their QA engineer to give the presentation. Some demo videos did not run during the presentation. I was expecting some key features of SRM to be discussed in detail, which did not happen.

I did, though, share my success story with the forum (another drawback of this event was that they had not invited a single customer to share a success story). I gave feedback such as:

a. VMware’s SRM documentation is very poor.

b. For a multi-site DR configuration, you cannot expect customers to buy VC licenses.

The presentation from NetApp was again disappointing. The speaker explained every NetApp feature rather than what NetApp offers for VMware. He should have explained features like de-dupe in detail, along with their benefits when we are using virtualization.

I liked the last presentation, by the VMware technical director, where he compared marketing by MS and VMware. He had a good amount of technical knowledge and clearly scans the internet for the latest happenings. He explained the DPM feature from VMware versus MS Hyper-V really well. MS Hyper-V is based on core parking, so how does it differ from VMware?

Say you have 10 cubicles in an office and each cubicle seats 4 people. After 6 PM only a few people are left in each cubicle. What MS does is rearrange people so that each occupied cubicle again has 4 people after 6 PM, but the electricity stays on for all the vacant cubicles. What VMware does is move all the people into a small area or small office and power off the rest of the building. That makes perfect sense when you want to save power.
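The DPM idea in that analogy is essentially bin packing: pack the load onto as few hosts as possible and power off the rest. The Python sketch below uses the textbook first-fit decreasing heuristic, with each VM's load expressed as a percentage of one host's capacity (an assumption for illustration); it only shows the concept and is not VMware's actual DPM algorithm.

```python
def consolidate(vm_loads, host_capacity):
    """First-fit decreasing: place each load on the first host with room, biggest loads first."""
    hosts = []
    for load in sorted(vm_loads, reverse=True):
        if load > host_capacity:
            raise ValueError(f"load {load} exceeds a single host's capacity")
        for host in hosts:
            if sum(host) + load <= host_capacity:
                host.append(load)
                break
        else:                      # no existing host had room: keep one more host on
            hosts.append([load])
    return hosts


def hosts_to_power_off(vm_loads, host_capacity, total_hosts):
    """How many of `total_hosts` could be powered off after consolidation."""
    return max(0, total_hosts - len(consolidate(vm_loads, host_capacity)))
```

With loads of 30, 30, 20, 20, 10 and 10 percent on a 4-host cluster, everything fits on 2 hosts, so 2 could be powered off.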

Lastly came the lucky draw session, where I won an 8 GB iPod from Vizioncore. Yeah, it is much better than the iPod touch I have. It even has a 2-megapixel camera.

Step by Step: Using VMware vSphere Host Update Utility

1. This is installed as part of the VC client install. Once it is installed, launch it and click Add Host.

2. It will ask for the details of the host; add it using its IP address.

3. Once the host appears in the panel, you will get the option “Upgrade Host”.

4. Once “Upgrade Host” is selected, it will ask for the ISO location. Provide the ISO location.

5. It will ask you to accept the VMware end user license agreement.

6. Provide the root credentials.

7. It will run a validation check.

8. It will present all the LUNs and local storage. Here you can specify where you want to place the upgrade.

9. It will then ask for post-upgrade options.

10. It will show a summary and tell you that it is ready :)

11. It will show the upgrade status.

Monday, November 23, 2009

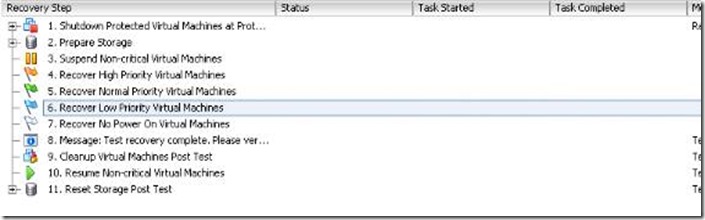

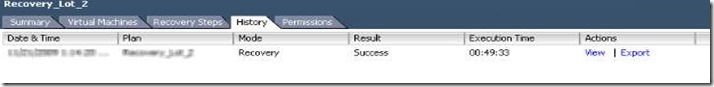

Another Milestone: Implementing SRM for Disaster Recovery

There are many FAQs available about SRM installation and configuration. One that I like is:

http://www.yellow-bricks.com/srm-faq/

I would like to share my own personal experience. On Saturday, 21st Nov 2009, I tested a live DR scenario for our customer’s readiness.

This involved 26 key applications of our customer, running across 17 physical database servers and 45 virtual machines. We excluded AD/Exchange; for Exchange we used the EMS system from Dell.

For the SRM setup I used:

1. 4 ESX hosts running on DL380 G5 servers; a few of them had QLogic HBAs installed and the others used software iSCSI.

2. The 4 ESX hosts were installed with ESX 3.5 U4, and on both sides I was running VC 2.5 U5.

3. All 4 ESX hosts were configured for HA/DRS.

4. Unfortunately, the 45 VMs were spread across 33 LUNs. I could have consolidated them from the SRM perspective.

5. For this purpose I used SRM v1.0.1 with Patch 1. We use NetApp as our storage solution.

6. To save bandwidth, we kept the protected and recovery filers at the same location, replicated, and then shipped the recovery filer across to the DR location. This saves bandwidth on the initial replication, after which we depend only on incremental replication.

7. We are using a “stretched VLAN” so that we don’t have to re-IP the machines when they are recovered at the recovery site.

SRM setup and configuration:

1. Create a service account for SRM, which will be used to set up the SQL database for SRM and during the installation of SRM.

2. Install the SRM database on SQL Server at each VirtualCenter. I configured the DSN beforehand, although SRM setup will prompt for it.

3. Install all the SRM patches for 1.0 and then install the SRA (Storage Replication Adapter). The adapter comes from the vendor whose storage solution you are using. You can download it from the VMware site only after logging in to your account; open download is not allowed. In my case I used the NetApp SRA.

4. Once both the Protected Site and the Recovery Site have all the components installed, we start pairing the sites. At the Recovery Site we need to provide the Protected Site IP, and at the Protected Site we need to provide the Recovery Site IP, so that they can be paired. Use the service account to pair the sites.

5. Once the sites are paired, we need to configure the SRA for the Protected Site and the Recovery Site. Before this is configured, we should ensure that replication has completed; check with the storage admin, or else we cannot proceed further with the configuration. This replication also helps us create the bubble VMs at the Recovery Site.

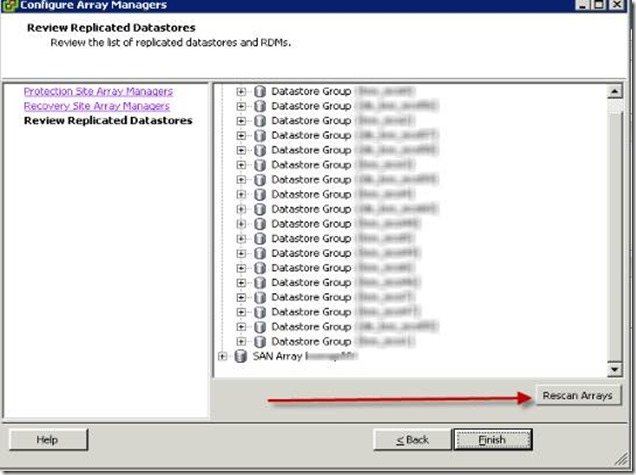

Once replication is confirmed complete, configure the arrays by running the “Configure Array Managers” wizard.

a. For the Protected Site, supply the IP address of the NetApp filer. Make sure you use root or root-like credentials to authenticate. You must see the replicated array pairs. If you have many filers with LUNs/volumes spread across them, use the Add button to add all of those filers.

b. Then configure the same for the Recovery Site. Whichever filers are paired, ensure that you add all the paired filers at the Recovery Site as well, using root or root-like credentials. Keep adding all the Recovery Site filer IPs; you will then see a green icon, which shows that the arrays have been paired properly.

c. Once the Protected Site and Recovery Site have been paired using the replication arrays, we can see all the replicated datastores. If they are not visible, rescan the arrays.

After the setup at the Protected Site, we need to do the same at the Recovery Site. The Protected Site and Recovery Site IPs remain as we configured them at the Protected Site. You won’t be able to see Replicated Datastores at the Recovery Site, as we are not configuring a two-way recovery site.

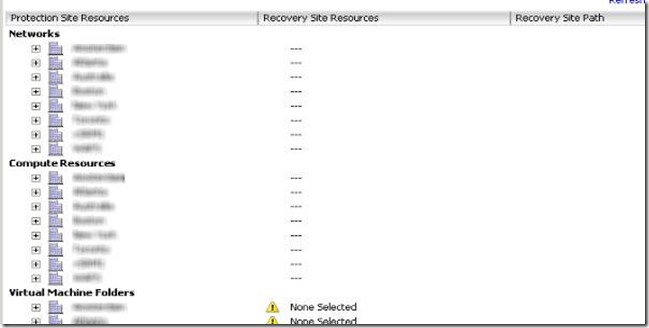

6. Now we need to configure the inventory mappings at the “Protected Site”.

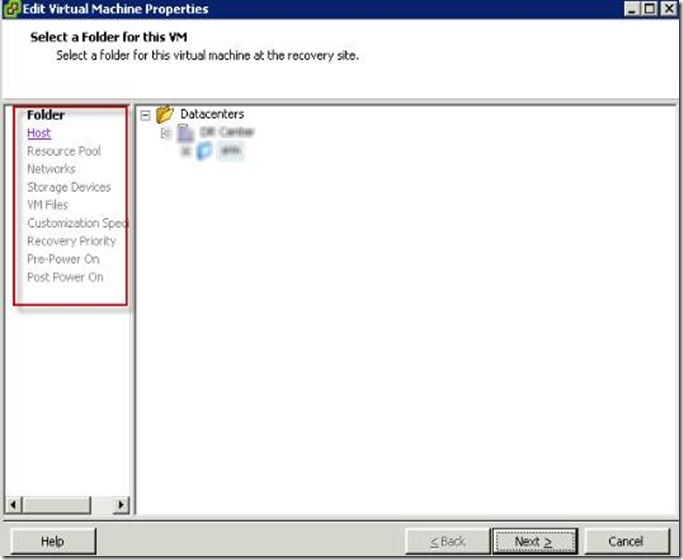

This is a very crucial and critical step at the Protected Site. Before doing the inventory mappings, we should consolidate all the VMs into a single folder, named something like SRM, at the Protected Site. Create a similar folder at the Recovery Site so that we can have a one-to-one mapping. We also need to make sure that, if we are mapping the “Compute Resource” to a cluster, it has HA/DRS enabled, or it will NOT allow the bubble VMs to be created.

This assumes that we are using stretched VLANs and that those VLANs have been created at the Recovery Site.

This step is not required at Recovery Site.
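Since missing mappings are what usually stop a VM from showing as configured for protection, a pre-check can help before running the wizard. The Python sketch below works on a hypothetical planning model (the `ProtectedVM` fields and the three mapping dictionaries are my assumptions, not an SRM API) and reports which VMs lack a folder, compute resource or network mapping.

```python
from dataclasses import dataclass


@dataclass
class ProtectedVM:
    """Hypothetical planning record for a VM at the protected site."""
    name: str
    folder: str
    resource: str  # cluster / resource pool at the protected site
    network: str   # port group the VM is attached to


def unmapped_items(vms, folder_map, resource_map, network_map):
    """Return {vm_name: [missing mapping kinds]} for VMs with incomplete mappings."""
    problems = {}
    for vm in vms:
        checks = (
            ("folder", vm.folder, folder_map),
            ("compute resource", vm.resource, resource_map),
            ("network", vm.network, network_map),
        )
        missing = [kind for kind, key, mapping in checks if key not in mapping]
        if missing:
            problems[vm.name] = missing
    return problems
```

An empty result means every VM's folder, cluster and network has a recovery-site counterpart in your plan.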

7. We now need to create protection groups at the Protected Site; this is not required at the Recovery Site.

Protection groups are based on individual LUNs and on which VMs on those LUNs need to be protected. A LUN can be part of only one protection group and cannot be mapped to any other protection group. Hence it is very important to classify the LUNs into HIGH/NORMAL/LOW categories, and to plan the mappings the same way. If the inventory mapping has been done correctly, the status will be shown as configured for protection.

We can also run through the wizard to configure each VM. Here we can set the startup priority (low/normal/high), which decides which VMs are started first.

If replication is complete, you can also see the status under Storage Devices.

Also note that at the Protected Site the VMs should not have any CD-ROM or floppy connected, or else automatic configuration will fail.
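The two rules above (a LUN belongs to only one protection group, and no VM may have a CD-ROM or floppy connected) can be checked against a planning sheet before touching SRM. This Python sketch assumes a hypothetical dictionary layout for groups and devices; it is not an SRM API.

```python
def validate_protection_groups(groups, vm_devices):
    """
    groups:     {group_name: {"luns": [...], "vms": [...]}}   (hypothetical planning data)
    vm_devices: {vm_name: set of connected devices, e.g. {"cdrom"}}
    Returns a list of problems; an empty list means the plan passes both checks.
    """
    problems = []
    owner = {}  # lun -> first protection group that claimed it
    for group, spec in groups.items():
        for lun in spec["luns"]:
            if lun in owner:
                problems.append(f"LUN {lun} is in both {owner[lun]} and {group}")
            else:
                owner[lun] = group
        for vm in spec["vms"]:
            connected = vm_devices.get(vm, set()) & {"cdrom", "floppy"}
            if connected:
                problems.append(f"{vm}: disconnect {', '.join(sorted(connected))}")
    return problems
```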

8. Once all the steps above are configured, we can start setting up the recovery plan at the Recovery Site. A recovery plan can be run in two modes: test and recovery. Test mode ensures that your actual recovery will run as required. It runs using FlexClone LUNs (in the case of NetApp storage) and a recovery bubble network, so we need a FlexClone license on the Recovery Site filer. These tests do not impact the production systems. After the test, all the VMs are powered off and the LUNs are resynced. Here we can move VMs in the priority list to make sure one starts first, then the next VM, and so on.

9. In actual recovery mode, SRM attaches the LUNs and then starts powering on the VMs from high to low priority. Once this is over, you can export the report by clicking History.
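The start-up priorities translate into power-on tiers: all high-priority VMs first, then normal, then low, keeping the listed order within each tier. A small Python sketch of that ordering, using a hypothetical `power_on_tiers` helper that takes (name, priority) pairs:

```python
PRIORITY_ORDER = ("high", "normal", "low")


def power_on_tiers(vms):
    """Split (name, priority) pairs into power-on tiers: high, then normal, then low."""
    tiers = {priority: [] for priority in PRIORITY_ORDER}
    for name, priority in vms:
        tiers[priority.lower()].append(name)  # an unknown priority raises KeyError
    return [tiers[priority] for priority in PRIORITY_ORDER]
```

Each inner list keeps the order you arranged in the recovery plan, which mirrors moving VMs up and down the priority list.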

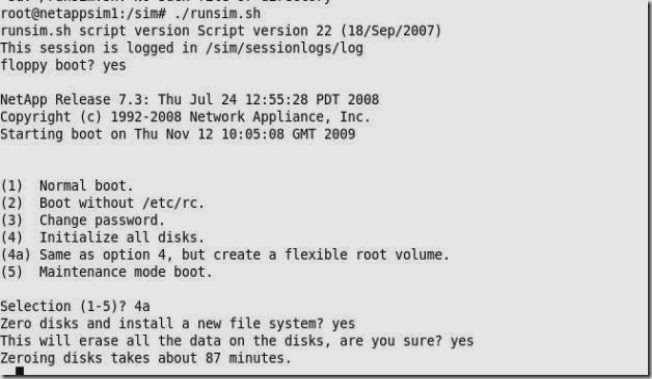

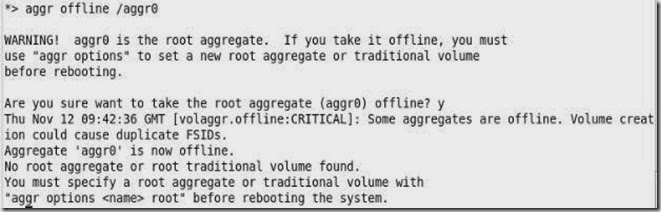

PANIC: SWARM REPLAY: log update failed

We have been trying to implement SRM, and I set up the NetApp 7.3 simulator on Ubuntu. During setup, the simulator assigns only 3 disks to the root aggregate.

This aggregate is installed by default when the simulator is installed, and it consists of 3 disks of 120 MB each. Make sure that once the installation is completed you add extra disks to the aggregate, or else you will end up with the error in the title of this post.

When you get that error, boot the system into maintenance mode and then create a new root volume. To get into maintenance mode, re-run the setup and set “floppy boot” to Yes. Note also that option 4a will initialize all the disks, and you will lose data.

I booted the system into maintenance mode and set aggr0 (the root volume) offline.

Now create the new root volume with the following command:

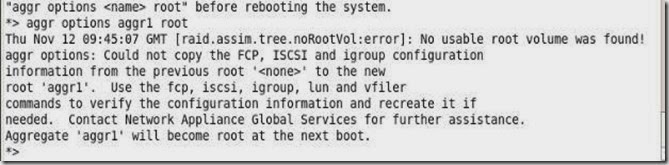

Reboot the system and run ./runsim.sh. If you have followed all the steps, you should be able to get the simulator online. I got the following messages because I did not follow the 4a step.

```
root@netappsim1:/sim# ./runsim.sh
runsim.sh script version Script version 22 (18/Sep/2007)
This session is logged in /sim/sessionlogs/log
NetApp Release 7.3: Thu Jul 24 12:55:28 PDT 2008
Copyright (c) 1992-2008 Network Appliance, Inc.
Starting boot on Thu Nov 12 14:30:07 GMT 2009
Thu Nov 12 14:30:15 GMT [fmmb.current.lock.disk:info]: Disk v4.18 is a local HA mailbox disk.
Thu Nov 12 14:30:15 GMT [fmmb.current.lock.disk:info]: Disk v4.17 is a local HA mailbox disk.
Thu Nov 12 14:30:15 GMT [fmmb.instStat.change:info]: normal mailbox instance on local side.
Thu Nov 12 14:30:16 GMT [raid.vol.replay.nvram:info]: Performing raid replay on volume(s)
Restoring parity from NVRAM
Thu Nov 12 14:30:16 GMT [raid.cksum.replay.summary:info]: Replayed 0 checksum blocks.
Thu Nov 12 14:30:16 GMT [raid.stripe.replay.summary:info]: Replayed 0 stripes.
Replaying WAFL log
.........
Thu Nov 12 14:30:20 GMT [rc:notice]: The system was down for 542 seconds
Thu Nov 12 14:30:20 GMT [javavm.javaDisabled:warning]: Java disabled: Missing /etc/java/rt131.jar.
Thu Nov 12 14:30:20 GMT [dfu.firmwareUpToDate:info]: Firmware is up-to-date on all disk drives
Thu Nov 12 14:30:20 GMT [sfu.firmwareUpToDate:info]: Firmware is up-to-date on all disk shelves.
Thu Nov 12 14:30:21 GMT [netif.linkUp:info]: Ethernet ns0: Link up.
Thu Nov 12 14:30:21 GMT [rc:info]: relog syslog Thu Nov 12 13:50:59 GMT [sysconfig.sysconfigtab.openFailed:notice]: sysconfig: table of valid configurations (/etc/sys
Thu Nov 12 14:30:21 GMT [rc:info]: relog syslog Thu Nov 12 14:00:00 GMT [kern.uptime.filer:info]: 2:00pm up 2:09 0 NFS ops, 0 CIFS ops, 0 HTTP ops, 0 FCP ops, 0 iS
Thu Nov 12 14:30:21 GMT [httpd.servlet.jvm.down:warning]: Java Virtual Machine is inaccessible. FilerView cannot start until you resolve this problem.
Thu Nov 12 14:30:21 GMT [sysconfig.sysconfigtab.openFailed:notice]: sysconfig: table of valid configurations (/etc/sysconfigtab) is missing.
Thu Nov 12 14:30:21 GMT [snmp.agent.msg.access.denied:warning]: Permission denied for SNMPv3 requests from root. Reason: Password is too short (SNMPv3 requires at least 8 characters).
Thu Nov 12 14:30:22 GMT [mgr.boot.disk_done:info]: NetApp Release 7.3 boot complete. Last disk update written at Thu Nov 12 14:21:08 GMT 2009
Thu Nov 12 14:30:22 GMT [mgr.boot.reason_ok:notice]: System rebooted after power-on.
Thu Nov 12 14:30:22 GMT [perf.archive.start:info]: Performance archiver started. Sampling 20 objects and 187 counters.
```
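Rather than reading the whole boot log, you can filter it by severity. The Python sketch below parses the `[event.name:severity]: message` pattern visible in the log above and keeps entries at or above a chosen level; the syslog-style severity ranking and the `events_at_or_above` name are assumptions.

```python
import re

# Matches the "[event.name:severity]: message" part of the boot log lines.
EVENT_RE = re.compile(r"\[(?P<event>[\w.]+):(?P<severity>\w+)\]:\s*(?P<message>.*)")
# Assumption: syslog-style severity ranking, least to most severe.
SEVERITIES = ["debug", "info", "notice", "warning", "error", "critical", "alert", "emergency"]
RANK = {name: i for i, name in enumerate(SEVERITIES)}


def events_at_or_above(lines, minimum="warning"):
    """Yield (event, severity, message) for log lines at or above `minimum`."""
    floor = RANK[minimum]
    for line in lines:
        m = EVENT_RE.search(line)
        if m and RANK.get(m.group("severity"), -1) >= floor:
            yield m.group("event"), m.group("severity"), m.group("message")
```

On the log above, the default `minimum="warning"` surfaces the Java, FilerView and SNMPv3 entries, which point straight at the problem discussed next.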

Check whether Java is disabled:

```
filer> java
Java is not enabled.
```

If Java is not enabled, FilerView won’t work and you need to reinstall the simulator image.

I will explain how to reinstall the simulator using NFS/CIFS once I have tested it. Keep following my blog.

Thursday, November 12, 2009

Snapshot with RDM

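I was asked whether you can take a snapshot of a VM with an RDM. I said yes, but it depends on the mode in which the RDM was added: it is not possible at all to take a snapshot of an RDM in physical compatibility mode, only in virtual compatibility mode.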

Find out more here

Set up a quick web server

1. Download Mongoose.

2. Install it in C:/mongoose.

3. Go to C:/mongoose and double-click mongoose.exe to start the web server.

That's it.

Now put the folder that you want to access over HTTP into C:/. If you browse to http://localhost:8080, you should be able to see the contents of C:/ from the browser.

Note: By default the Mongoose web root folder is set to C:\; you can change it to a folder of your own.
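If Mongoose is not at hand, the same idea (serve one folder over HTTP on port 8080) can be sketched with Python's built-in `http.server` module. This is a stand-in for Mongoose, not a Mongoose configuration. The folder path is hypothetical, and binding only to 127.0.0.1 (so the folder is not exposed to the network) is an assumption made for safety.

```python
import functools
import http.server


def make_server(root: str, port: int = 8080) -> http.server.ThreadingHTTPServer:
    """Build an HTTP server that serves files from `root` (Python 3.7+).

    Binds to 127.0.0.1 only, so the folder is reachable from this machine
    but not from other machines on the network.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=root)
    return http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)


def serve_folder(root: str, port: int = 8080) -> None:
    """Serve `root` until interrupted with Ctrl+C."""
    with make_server(root, port) as server:
        print(f"Serving {root} at http://127.0.0.1:{server.server_address[1]}/")
        try:
            server.serve_forever()
        except KeyboardInterrupt:
            pass
```

Usage, with a hypothetical folder: `serve_folder(r"C:\mongoose\www")`, then browse to http://localhost:8080.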

Monday, November 9, 2009

I am now a VMware Certified Professional on vSphere 4

I referred to the VCP410 What's New student manual and the Configuration Maximums for VCP410. Sometimes I failed to understand the testing pattern. For example, out of 4 answers only 2 are correct as per VMware, while we are sure that 3 of them are correct. But VMware will only accept the two which they think are best. I guess they should add some intelligence to their system database and also accept the 3rd answer.

Wednesday, November 4, 2009

How to perform manual DR using VMware and NetApp?

We used SnapMirror technology from NetApp to accomplish “Plan B”.

Here is what I suggested:

1. During the initial setup, run SnapMirror over the LAN, and once the baseline transfer has completed, ship the filer to the DR location. This saves replication bandwidth, because afterwards only the changes get replicated.

2. With a manual process we need to maintain a few documents, especially a list of all the LUNs that get replicated across locations, along with their serial numbers. The reason is explained below.

3. Once the replication is over, we start preparing for DR testing. For testing purposes I selected one ESX host with dummy VLANs.

4. I broke the SnapMirror relationship between the filers for the volume I was interested in testing. Once the relationship is broken, the volume becomes active and all its LUNs are visible on the filer, with the same LUN names and LUN numbers. But the serial numbers will be changed.

5. This is the scariest part of the entire exercise. If the LUN serial number does not match that of the primary site, the LUN will appear as a blank LUN. We need a one-to-one mapping of LUN serial numbers so that each one matches the protected site.

Before you change the LUN serial number at the recovery site, take the LUN offline (lun offline <lun_path>) and then run the following command to change the serial number:

# lun serial <lun_path> <serial_number>

E.g.: lun serial /vol/S_xxxx_011PP_vol1/lun1 12345

6. Once the LUN has the same serial number, bring it back online (lun online <lun_path>), map it to the correct igroup and rescan the HBAs on the ESX host. Once the rescan is completed, all the LUNs and datastores will appear “AS IS” at the recovery site.

7. We have to register all the VMs in order to power them on. This can be accomplished using a script.
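Steps 4 to 7 lend themselves to scripting. Below is a minimal Python sketch that assumes the step 2 document is kept as a list of (LUN path, protected-site serial, LUN number) records; it only prints the commands so they can be reviewed before anything is run. The filer side uses Data ONTAP 7-mode `snapmirror break`, `lun offline`, `lun serial`, `lun online` and `lun map`; the ESX side uses the service-console `vmware-cmd -s register`. The volume, LUN paths, serials, igroup name and .vmx paths are all hypothetical.

```python
import shlex
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LunRecord:
    """One row of the step 2 document: a LUN as it exists at the protected site."""
    path: str     # LUN path, identical at both sites after replication
    serial: str   # serial number recorded at the protected site
    lun_id: int   # LUN number the ESX hosts expect


def filer_commands(volume: str, luns: List[LunRecord], igroup: str) -> List[str]:
    """Data ONTAP 7-mode commands for steps 4 to 6 on the recovery filer."""
    cmds = [f"snapmirror break {volume}"]  # step 4: make the mirrored volume active
    for lun in luns:
        cmds += [
            f"lun offline {lun.path}",                    # serial is changed while offline
            f"lun serial {lun.path} {lun.serial}",        # step 5: restore the protected-site serial
            f"lun online {lun.path}",
            f"lun map {lun.path} {igroup} {lun.lun_id}",  # step 6: present to the ESX igroup
        ]
    return cmds


def esx_register_commands(vmx_paths: List[str]) -> List[str]:
    """Step 7: register each VM on the recovery ESX host (quoted for the shell)."""
    return [f"vmware-cmd -s register {shlex.quote(path)}" for path in vmx_paths]


if __name__ == "__main__":
    # Hypothetical inventory; replace with the values from your own step 2 document.
    luns = [LunRecord("/vol/dr_vol1/lun1", "12345", 0)]
    for line in filer_commands("dr_vol1", luns, "esx_dr_igroup"):
        print(line)
    # After the HBA rescan on the ESX host:
    for line in esx_register_commands(["/vmfs/volumes/dr_datastore/VM1/VM1.vmx"]):
        print(line)
```

Printing rather than executing keeps a human check between the generated list and the filer, which matters most for the serial numbers in step 5.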

Happy DR.

Sunday, October 25, 2009

VSRM2: Network Considerations

The man behind this effort was my network colleague Randall Bjorge.

1. We created the same VLAN at the Recovery Site as at the Protected Site. For example, if VLAN 100 exists at the Protected Site, we also created VLAN 100 at the Recovery Site.

2. The VLAN created at the Recovery Site is kept in shutdown state.

3. When we initiated the actual DR for our test environment, we shut down the VLAN at the Protected Site.

4. We then brought up the VLAN at the Recovery Site and pressed the RED recovery button.

To simplify this process we scripted the VLAN shutdown and bring-up. When we brought up the VLAN at the Recovery Site, we had some challenges with network routing.

I expected this process to be very tough, but it turned out to be simple and straightforward. For an actual DR scenario I am planning to place a DC/DNS/DHCP server to keep the IP routing simple.
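The essential rule in steps 3 and 4 is ordering: the same VLAN must never be up at both sites at once. The post does not show the actual script or the switch platform, so this is only a vendor-neutral Python sketch of that ordering. `set_vlan_state` is a hypothetical callback standing in for whatever switch commands the real script pushes, and the in-memory dictionary exists purely for demonstration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical hook: (site, vlan_id, up) -> None. A real script would push the
# switch-specific shutdown / no-shutdown commands here.
SetVlanState = Callable[[str, int, bool], None]


def fail_over_vlans(vlans: List[int], set_vlan_state: SetVlanState) -> None:
    """Move each VLAN from the Protected Site to the Recovery Site.

    Every Protected Site VLAN is shut down first; only then are the Recovery
    Site copies brought up, so no VLAN is ever live at both sites.
    """
    for vlan in vlans:
        set_vlan_state("protected", vlan, False)
    for vlan in vlans:
        set_vlan_state("recovery", vlan, True)


if __name__ == "__main__":
    # Starting state from steps 1 and 2: up at Protected, shut at Recovery.
    state: Dict[Tuple[str, int], bool] = {("protected", 100): True, ("recovery", 100): False}

    def fake_switch(site: str, vlan: int, up: bool) -> None:
        state[(site, vlan)] = up
        # Safety check after every change: never up at both sites.
        assert not (state[("protected", vlan)] and state[("recovery", vlan)])
        print(f"{site}: VLAN {vlan} {'up' if up else 'shutdown'}")

    fail_over_vlans([100], fake_switch)
```

Shutting everything down before bringing anything up costs a few seconds of extra outage, but it avoids two live copies of the same subnet fighting over routing.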

Friday, October 23, 2009

Storage VMotion myth and deep dive

I had been involved so heavily in Storage VMotion that I decided to write about it. A nice source is here.

First of all, Storage VMotion:

- Takes an ESX snapshot

- Then file-copies the closed VMDK (which is the large majority of the VM) to the target datastore

- Then "reparents" the open (and small) VMDK to the target (synchronizing)

- Then "deletes" the snapshot (in effect merging the large VMDK with the small reparented VMDK)
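To make those four steps concrete, here is a toy Python model that treats a disk as a dictionary from block number to data. It is only a simplified illustration of the snapshot, copy, reparent and merge sequence described above, not how ESX implements Storage VMotion internally. `Disk` and `storage_vmotion` are made-up names.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Disk:
    """Toy virtual disk: block number -> data, optionally layered on a parent."""
    datastore: str
    blocks: Dict[int, str] = field(default_factory=dict)
    parent: Optional["Disk"] = None


def storage_vmotion(base: Disk, target: str, guest_writes: Dict[int, str]) -> Disk:
    """Simplified model of the four steps; returns the merged disk on `target`."""
    # 1. Take a snapshot: the base disk is now closed (read-only) and new guest
    #    writes land in a small delta disk layered on top of it.
    delta = Disk(base.datastore, parent=base)
    delta.blocks.update(guest_writes)  # writes that arrive while the copy runs

    # 2. File-copy the closed, large base disk to the target datastore.
    base_copy = Disk(target, dict(base.blocks))

    # 3. Reparent the open, small delta onto the copy; it now lives on the target.
    delta.parent = base_copy
    delta.datastore = target

    # 4. Delete the snapshot: merge the delta into the copied base disk.
    base_copy.blocks.update(delta.blocks)
    return base_copy
```

Note that the source disk is never modified; only the small delta has to be synchronized, which is why the large copy in step 2 can run while the VM stays online.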

Myths and deep dive:

- In a cluster with 5 hosts and 10 LUNs, if you want to svmotion to an 11th LUN, the 11th LUN must be visible to all the hosts in the cluster. It does not work if the LUN is presented to an individual host only.

- You can do 1 svmotion per ESX host and a maximum of 32 per cluster (Pheew)

- You can have 4 svmotions per LUN. This means you can have many source LUNs, but only 4 svmotions will run at a time against any target LUN.

- Storage VMotion can only happen within one datacenter; it cannot happen between two different datacenters (phewee).

- Storage VMotion cannot be used on a virtual machine with NPIV enabled.
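When planning a batch, those limits become a simple scheduling problem. This Python sketch groups migrations into waves, taking the figures quoted above (1 per host, 32 per cluster, 4 per target LUN) as default parameters; treat them as this post's numbers and adjust them for your own ESX version. Host, VM and LUN names in the example are hypothetical.

```python
from collections import Counter
from typing import List, NamedTuple


class Migration(NamedTuple):
    vm: str
    host: str        # ESX host the VM currently runs on
    target_lun: str  # destination LUN / datastore


def plan_waves(migrations: List[Migration], per_host: int = 1,
               per_cluster: int = 32, per_target_lun: int = 4) -> List[List[Migration]]:
    """Greedily pack migrations into waves that respect all three limits.

    Each wave is a set of svmotions that may run at the same time; the next
    wave starts only after the previous one has finished.
    """
    if min(per_host, per_cluster, per_target_lun) < 1:
        raise ValueError("every limit must be at least 1")
    pending: List[Migration] = list(migrations)
    waves: List[List[Migration]] = []
    while pending:
        wave: List[Migration] = []
        deferred: List[Migration] = []
        host_count: Counter = Counter()
        lun_count: Counter = Counter()
        for m in pending:
            fits = (len(wave) < per_cluster
                    and host_count[m.host] < per_host
                    and lun_count[m.target_lun] < per_target_lun)
            if fits:
                wave.append(m)
                host_count[m.host] += 1
                lun_count[m.target_lun] += 1
            else:
                deferred.append(m)  # retry in a later wave
        waves.append(wave)
        pending = deferred
    return waves


if __name__ == "__main__":
    # Hypothetical batch: 6 VMs spread over 2 hosts, all going to NewLUN1.
    batch = [Migration(f"VM{i}", f"esx0{i % 2 + 1}", "NewLUN1") for i in range(6)]
    for n, wave in enumerate(plan_waves(batch), start=1):
        print(f"wave {n}:", ", ".join(m.vm for m in wave))
```

The greedy approach is not optimal in every case, but every wave contains at least one migration, so the loop always terminates.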

Batch Storage VMotion

I had to do a batch svmotion and decided to follow this guy's nice article.

Place postie.exe (freeware) and AutoSVmotion.vbs (contents at the end) in the Remote CLI bin directory (download and install the Remote CLI from the VMware site; the default location is "C:\Program Files\VMWare\VMWare VI Remote CLI\Bin"). Also create a batch file in this directory; it will be used to call AutoSVmotion.vbs. In the batch file, list every VM that you need to migrate, one line per VM, in the format below:

cscript.exe AutoSVmotion.vbs %VC% %Username% %Password% %Datacentre% %SourceDatastore% %VMXFilePath% %DestDatastore% %SMTPServ% %ToEmailAddress% %FromEmailAddress%

Example of the batch file:

cscript.exe AutoSVmotion.vbs VC01 Admin P@ssw0rd London OLDLUN1 VM1/VM1.vmx NewLUN1 SMTP01 virtuallysi@example.com vikash.roy@test.com

cscript.exe AutoSVmotion.vbs VC01 Admin P@ssw0rd London OLDLUN1 VM2/VM2.vmx NewLUN1 SMTP01 virtuallysi@example.com vikash.roy@test.com

Example of the email output:

From: vikash.roy@test.com

Date: 13 September 2009 08:45:43 GMT+01:00

To: virtuallysi@example.com

Subject: SVMotion Progress Report

Successfully migrated VM1/VM1.vmx

Contents of AutoSVmotion.vbs

' AutoSVmotion.vbs - migrate one VM with svmotion.pl and e-mail the result via postie.exe
Dim WshShell, oArgs, sVCServer, sUsername, sPassword, sDatacenter, sSourceStore, sVMXLocation, _
    sDestStore, sSMTPServer, sToEmailAddress, sFromEmailAddress, sSVMotioncmd, iRetVal, sEMailText, sEmailCmd
On Error Resume Next
Set WshShell = WScript.CreateObject("WScript.Shell")
Set oArgs = WScript.Arguments
' Positional arguments, in the same order as in the batch file
sVCServer = oArgs(0)
sUsername = oArgs(1)
sPassword = oArgs(2)
sDatacenter = oArgs(3)
sSourceStore = oArgs(4)
sVMXLocation = oArgs(5)
sDestStore = oArgs(6)
sSMTPServer = oArgs(7)
sToEmailAddress = oArgs(8)
sFromEmailAddress = oArgs(9)
' Builds: svmotion.pl ... --vm="[SourceStore] VM/VM.vmx:DestStore"
sSVMotioncmd = "cmd.exe /c svmotion.pl --url=https://" & sVCServer & "/sdk --username=" & sUsername & _
    " --password=" & sPassword & " --datacenter=" & sDatacenter & " --vm=" & Chr(34) & "[" & sSourceStore & "] " & sVMXLocation & _
    ":" & sDestStore & Chr(34)
' Echoes the full command line (including the password) to the console
WScript.Echo sSVMotioncmd
' Run synchronously (True) and capture the exit code of svmotion.pl
iRetVal = WshShell.Run(sSVMotioncmd, 1, True)
If iRetVal = 0 Then
    sEMailText = "Successfully migrated " & sVMXLocation
Else
    sEMailText = "SVmotion failed for " & sVMXLocation & " with error number " & iRetVal
End If
' Same report command for both outcomes; only the message text differs
sEmailCmd = "cmd.exe /c postie.exe -host:" & sSMTPServer & " -to:" & sToEmailAddress & " -from:" & sFromEmailAddress & _
    " -s:" & Chr(34) & "SVMotion Progress Report" & Chr(34) & " -msg:" & Chr(34) & sEMailText & Chr(34)
WshShell.Run sEmailCmd, 1, True

Tuesday, October 13, 2009

How to validate a VMware license?

Go to http://www.vmware.com/checklicense/ and click Validate; your license will be validated.

This brings up a page with the following message:

Welcome to the license file checking utility. This tool will take a pasted license file and parse, reformat, and attempt to repair it. It will also give you statistics regarding the total number of licenses found and highlight inconsistencies that could potentially cause issues.

Currently, this utility only handles "server-based" license files (used with VirtualCenter). Host-based licenses that stand-alone on a single server are not supported at this time.

Saturday, October 10, 2009

Working with BMC and vif on FAS2020

We had a situation with a FAS2020 where the two interfaces, e0a and e0b, had to be assigned two separate IPs. But when the NetApp engineer commissioned the FAS2020, they created a vif and assigned both physical interfaces, e0a and e0b, to it.

This is how the back panel of the FAS2020 looks:

The circled port is the BMC, which is similar to iLO.

The FAS2020 has two Ethernet interfaces.

So I had the challenge of deleting the vif on the FAS2020. The vif is a virtual interface bound to both physical NICs, and FilerView is accessed through the vif, so we cannot change the vif using FilerView. This needs to be done either over the serial interface or through the BMC (which, like iLO, provides console access).

A couple of facts about the BMC that I learned (remember, I am still learning NetApp):

1. The BMC has its own IP address, and you need to SSH to that IP, not telnet.

2. For the user ID, use “naroot”; the password is the same as root's.

3. Once you log in to the BMC console, you can log in to the system console using root and the root password.

This is how to execute the commands:

login as: naroot

naroot@xx.0.86's password:

=== OEMCLP v1.0.0 BMC v1.2 ===

bmc shell ->

bmc shell ->

bmc shell -> system console

Press ^G to enter BMC command shell

Data ONTAP (xxxfas001.xxx.net)

login: root

Password:

xxxfas001> Fri Oct 9 11:08:06 EST [console_login_mgr:info]: root logged in from console

xxxfas001> ifconfig /all

ifconfig: /all: no such interface

xxxfas001> ifconfig

usage: ifconfig [ -a | [ <interface>

[ [ alias | -alias ] [no_ddns] <address> ] [ up | down ]

[ netmask <mask> ] [ broadcast <address> ]

[ mtusize <size> ]

[ mediatype { tp | tp-fd | 100tx | 100tx-fd | 1000fx | 10g-sr | auto } ]

[ flowcontrol { none | receive | send | full } ]

[ trusted | untrusted ]

[ wins | -wins ]

[ [ partner { <address> | <interface> } ] | [ -partner ] ]

[ nfo | -nfo ] ]

xxxfas001> ifconfig -a

e0a: flags=948043<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500

ether 02:a0:98:11:64:24 (auto-1000t-fd-up) flowcontrol full

trunked svif01

e0b: flags=948043<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500

ether 02:a0:98:11:64:24 (auto-1000t-fd-up) flowcontrol full

trunked svif01

lo: flags=1948049<UP,LOOPBACK,RUNNING,MULTICAST,TCPCKSUM> mtu 9188

inet 127.0.0.1 netmask 0xff000000 broadcast 127.0.0.1

svif01: flags=948043<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500

inet xx.xxx.xx.xx netmask 0xffffff00 broadcast xx.xx.xx.255

ether 02:a0:98:11:64:24 (Enabled virtual interface)

xxxfas001> ifconfig svif01 down

xxxfas001> Fri Oct 9 11:10:23 EST [pvif.vifConfigDown:info]: svif01: Configured down

Fri Oct 9 11:10:23 EST [netif.linkInfo:info]: Ethernet e0b: Link configured down.

Fri Oct 9 11:10:23 EST [netif.linkInfo:info]: Ethernet e0a: Link configured down.

xxxfas001> Fri Oct 9 11:10:37 EST [nbt.nbns.registrationComplete:info]: NBT: All CIFS name registrations have completed for the local server.

xxxfas001> vif destory svif01

vif: Did not recognize option "destory".

Usage:

vif create [single|multi|lacp] <vif_name> -b [rr|mac|ip] [<interface_list>]

vif add <vif_name> <interface_list>

vif delete <vif_name> <interface_name>

vif destroy <vif_name>

vif {favor|nofavor} <interface>

vif status [<vif_name>]

vif stat <vif_name> [interval]

xxxfas001> vif destroy svif01

xxxfas001> if config -a

if not found. Type '?' for a list of commands

xxxfas001> ifconfig -a

e0a: flags=108042<BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500

ether 00:a0:98:11:64:24 (auto-1000t-fd-cfg_down) flowcontrol full

e0b: flags=108042<BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500

ether 00:a0:98:11:64:25 (auto-1000t-fd-cfg_down) flowcontrol full

lo: flags=1948049<UP,LOOPBACK,RUNNING,MULTICAST,TCPCKSUM> mtu 9188

inet 127.0.0.1 netmask 0xff000000 broadcast 127.0.0.1

xxxfas001> ifconfig

usage: ifconfig [ -a | [ <interface>

[ [ alias | -alias ] [no_ddns] <address> ] [ up | down ]

[ netmask <mask> ] [ broadcast <address> ]

[ mtusize <size> ]

[ mediatype { tp | tp-fd | 100tx | 100tx-fd | 1000fx | 10g-sr | auto } ]

[ flowcontrol { none | receive | send | full } ]

[ trusted | untrusted ]

[ wins | -wins ]

[ [ partner { <address> | <interface> } ] | [ -partner ] ]

[ nfo | -nfo ] ]

xxxfas001> ifconfig e0a xx.xx.xx netmask 255.255.255.0

xxxfas001> Fri Oct 9 11:17:24 EST [netif.linkUp:info]: Ethernet e0a: Link up.

xxxfas001> ifconfig e0a xx.xx.xx.xx netmask 255.255.255.0

Fri Oct 9 11:17:50 EST [nbt.nbns.registrationComplete:info]: NBT: All CIFS name registrations have completed for the local server.

xxxfas001> ifconfig e0a xx.xx.xx.xx netmask 255.255.2

xxxfas001> ifconfig -a

e0a: flags=948043<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500

inet xx.xx.xx.xx netmask 0xffffff00 broadcast xx.xx.xx.255

ether 00:a0:98:11:64:24 (auto-1000t-fd-up) flowcontrol full

e0b: flags=108042<BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500

ether 00:a0:98:11:64:25 (auto-1000t-fd-cfg_down) flowcontrol full

lo: flags=1948049<UP,LOOPBACK,RUNNING,MULTICAST,TCPCKSUM> mtu 9188

inet 127.0.0.1 netmask 0xff000000 broadcast 127.0.0.1

xxxfas001> Fri Oct 9 11:20:03 EST [nbt.nbns.registrationComplete:info]: NBT: All CIFS name registrations have completed for the local server.

Fri Oct 9 11:21:16 EST [netif.linkInfo:info]: Ethernet e0b: Link configured down.

Fri Oct 9 11:21:29 EST [netif.linkUp:info]: Ethernet e0b: Link up.

Here you can see how the iSCSI connections were established after the changes:

Fri Oct 9 11:21:38 EST [iscsi.notice:notice]: ISCSI: New session from initiator iqn.2000-04.com.qlogic:qle4062c.lfc0908h84979.2 at IP addr 192.168.0.2

Fri Oct 9 11:21:38 EST [iscsi.notice:notice]: ISCSI: New session from initiator iqn.2000-04.com.qlogic:qle4062c.lfc0908h84979.2 at IP addr 192.168.0.2

Fri Oct 9 11:22:16 EST [nbt.nbns.registrationComplete:info]: NBT: All CIFS name registrations have completed for the local server.
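Before destroying a vif, it helps to know exactly which physical ports it owns. This Python sketch reads `ifconfig -a` output in the format shown in this session, collects the `trunked <vif>` lines, and prints the same command sequence used above (take the vif down, destroy it, address each port). It assumes each interface stanza starts with `name: flags=` as in this transcript. The new addresses are placeholders from the 192.0.2.0/24 documentation range, not the real IPs.

```python
import re
from typing import Dict, List, Optional

# Stanza headers such as "e0a: flags=948043<UP,...> mtu 1500"
IFACE_RE = re.compile(r"^(\w+): flags=")
# Member lines such as "trunked svif01"
TRUNK_RE = re.compile(r"^trunked (\w+)")


def vif_members(ifconfig_output: str) -> Dict[str, List[str]]:
    """Map each vif name to the physical interfaces trunked into it."""
    members: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for raw in ifconfig_output.splitlines():
        line = raw.strip()
        header = IFACE_RE.match(line)
        if header:
            current = header.group(1)
            continue
        trunk = TRUNK_RE.match(line)
        if trunk and current:
            members.setdefault(trunk.group(1), []).append(current)
    return members


def split_vif_commands(vif: str, addresses: Dict[str, str],
                       netmask: str = "255.255.255.0") -> List[str]:
    """Commands from the session: take the vif down, destroy it, then give
    each freed physical interface its own address."""
    cmds = [f"ifconfig {vif} down", f"vif destroy {vif}"]
    for iface, addr in addresses.items():
        cmds.append(f"ifconfig {iface} {addr} netmask {netmask}")
    return cmds


if __name__ == "__main__":
    sample = """\
e0a: flags=948043<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500
trunked svif01
e0b: flags=948043<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500
trunked svif01
svif01: flags=948043<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500
"""
    for vif, ports in vif_members(sample).items():
        print(vif, "->", ports)
        # 192.0.2.x are documentation addresses standing in for the real IPs.
        new_ips = {port: f"192.0.2.{10 + i}" for i, port in enumerate(ports)}
        for cmd in split_vif_commands(vif, new_ips):
            print(cmd)
```

Because FilerView rides on the vif, run the generated commands from the BMC or serial console, as in the session above.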